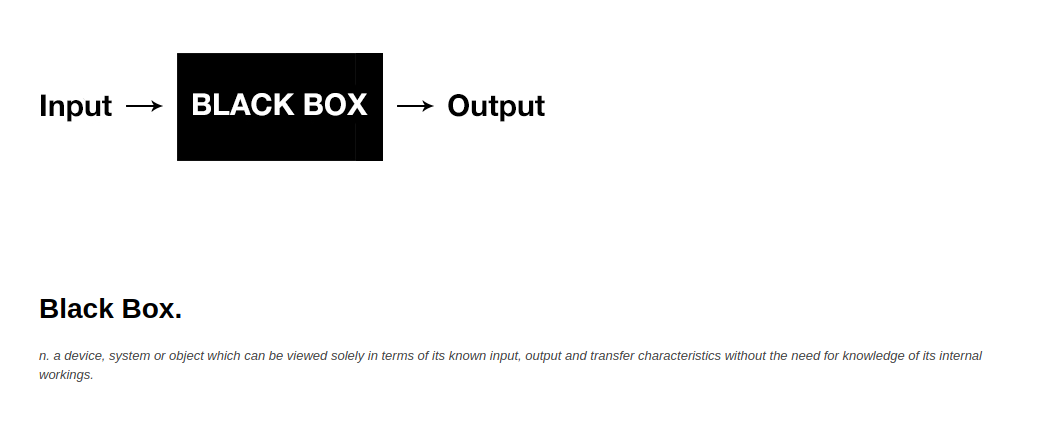

class: center, middle, inverse, title-slide

.title[
# All About Squares
]
.subtitle[
## Unpacking the Black Box
]
.author[
### Robert W. Walker
]
.date[
### 2024-10-30
]

---
background-image: url("img/SquaresBG.jpg")
class: center, middle

--

---

.left-column[
### Summary Statistics are Insufficient

``` r
load(url("https://github.com/robertwwalker/DADMStuff/raw/master/RegressionExamples.RData"))
# Mean and standard deviation of x and y within each of the 13 datasets
datasaurus %>%
  group_by(dataset) %>%
  summarise(Mean.x = mean(x), Std.Dev.x = sd(x),
            Mean.y = mean(y), Std.Dev.y = sd(y)) %>%
  kable(format="html")
```
]
.right-column[
<table>
<thead>
<tr> <th style="text-align:left;"> dataset </th> <th style="text-align:right;"> Mean.x </th> <th style="text-align:right;"> Std.Dev.x </th> <th style="text-align:right;"> Mean.y </th> <th style="text-align:right;"> Std.Dev.y </th> </tr>
</thead>
<tbody>
<tr> <td style="text-align:left;"> away </td> <td style="text-align:right;"> 54.26610 </td> <td style="text-align:right;"> 16.76983 </td> <td style="text-align:right;"> 47.83472 </td> <td style="text-align:right;"> 26.93974 </td> </tr>
<tr> <td style="text-align:left;"> bullseye </td> <td style="text-align:right;"> 54.26873 </td> <td style="text-align:right;"> 16.76924 </td> <td style="text-align:right;"> 47.83082 </td> <td style="text-align:right;"> 26.93573 </td> </tr>
<tr> <td style="text-align:left;"> circle </td> <td style="text-align:right;"> 54.26732 </td> <td style="text-align:right;"> 16.76001 </td> <td style="text-align:right;"> 47.83772 </td> <td style="text-align:right;"> 26.93004 </td> </tr>
<tr> <td style="text-align:left;"> dino </td> <td style="text-align:right;"> 54.26327 </td> <td style="text-align:right;"> 16.76514 </td> <td style="text-align:right;"> 47.83225 </td> <td style="text-align:right;"> 26.93540 </td> </tr>
<tr> <td style="text-align:left;"> dots </td> <td style="text-align:right;"> 54.26030 </td> <td style="text-align:right;"> 16.76774 </td> <td style="text-align:right;"> 47.83983 </td> <td
style="text-align:right;"> 26.93019 </td> </tr>
<tr> <td style="text-align:left;"> h_lines </td> <td style="text-align:right;"> 54.26144 </td> <td style="text-align:right;"> 16.76590 </td> <td style="text-align:right;"> 47.83025 </td> <td style="text-align:right;"> 26.93988 </td> </tr>
<tr> <td style="text-align:left;"> high_lines </td> <td style="text-align:right;"> 54.26881 </td> <td style="text-align:right;"> 16.76670 </td> <td style="text-align:right;"> 47.83545 </td> <td style="text-align:right;"> 26.94000 </td> </tr>
<tr> <td style="text-align:left;"> slant_down </td> <td style="text-align:right;"> 54.26785 </td> <td style="text-align:right;"> 16.76676 </td> <td style="text-align:right;"> 47.83590 </td> <td style="text-align:right;"> 26.93610 </td> </tr>
<tr> <td style="text-align:left;"> slant_up </td> <td style="text-align:right;"> 54.26588 </td> <td style="text-align:right;"> 16.76885 </td> <td style="text-align:right;"> 47.83150 </td> <td style="text-align:right;"> 26.93861 </td> </tr>
<tr> <td style="text-align:left;"> star </td> <td style="text-align:right;"> 54.26734 </td> <td style="text-align:right;"> 16.76896 </td> <td style="text-align:right;"> 47.83955 </td> <td style="text-align:right;"> 26.93027 </td> </tr>
<tr> <td style="text-align:left;"> v_lines </td> <td style="text-align:right;"> 54.26993 </td> <td style="text-align:right;"> 16.76996 </td> <td style="text-align:right;"> 47.83699 </td> <td style="text-align:right;"> 26.93768 </td> </tr>
<tr> <td style="text-align:left;"> wide_lines </td> <td style="text-align:right;"> 54.26692 </td> <td style="text-align:right;"> 16.77000 </td> <td style="text-align:right;"> 47.83160 </td> <td style="text-align:right;"> 26.93790 </td> </tr>
<tr> <td style="text-align:left;"> x_shape </td> <td style="text-align:right;"> 54.26015 </td> <td style="text-align:right;"> 16.76996 </td> <td style="text-align:right;"> 47.83972 </td> <td style="text-align:right;"> 26.93000 </td> </tr>
</tbody>
</table>
]

---
class: center, middle, inverse

# Relationships Matter

---

.left-column[
``` r
# One scatterplot per dataset; the color legend is dropped
# because the facet labels already name each dataset
datasaurus %>%
  ggplot() +
  aes(x=x, y=y, color=dataset) +
  geom_point() +
  guides(color="none") +
  facet_wrap(vars(dataset))
```
]
.right-column[
<img src="index_files/figure-html/unnamed-chunk-3-1.svg" width="468" />
]

## Always Visualize the Data

---
class: inverse

## Models Use Inference to ...

--

## Unpack Black Boxes

--

## We will literally do it with boxes/squares

---

# Data and a Library

``` r
library(tidyverse); library(patchwork); library(skimr)
load(url("https://github.com/robertwwalker/DADMStuff/raw/master/RegressionExamples.RData"))
source('https://github.com/robertwwalker/DADMStuff/raw/master/ResidPlotter.R')
source('https://github.com/robertwwalker/DADMStuff/raw/master/PlotlyReg.R')
```

---

# Pivots Gather/Spread and tidy data

Radiant can be picky about data structures. `ggplot` is built around the idea of tidy data from Week 2. How do we make data tidy? In R code, we use `pivot_wider()` and `pivot_longer()`; Radiant still uses the older `spread()`/`gather()`, which tidyr has superseded and which may eventually become obsolete.

---

# A Bit on Covariance and Correlation

Covariance is defined as:

`$$s_{xy} = \frac{1}{N-1}\sum_{i} (x_{i} - \overline{x})(y_{i} - \overline{y})$$`

where `\(\overline{x}\)` and `\(\overline{y}\)` are the sample means of x and y. The items in parentheses are deviations from the mean; covariance is the average product of those deviations, averaged over `\(N-1\)` degrees of freedom. The units of covariance are the product of the units of `\(x\)` and `\(y\)`, which rarely have a natural baseline. For this reason, we use a normalized form of covariance known as correlation, often written `\(\rho\)` (or `\(r\)` for a sample):

`$$\rho_{xy} = \frac{s_{xy}}{s_{x}s_{y}} = \frac{1}{N-1}\sum_{i} \frac{(x_{i} - \overline{x})(y_{i} - \overline{y})}{s_{x}s_{y}}$$`

Correlation is a **normed** measure; it varies between -1 and 1.

---
class: inverse

## An Oregon Gender Gap

--

.pull-left[
A random sample of salaries in the 1990s for new hires from Oregon DAS. I know *nothing* about them.
It is worth noting that gender is a protected class under Oregon statute. The data are available as a state file, or we can load them from GitHub.

``` r
OregonSalaries %>%
  select(-Obs) %>%
  # Index the 16 salaries within each gender so pivot_wider() can line them up
  mutate(Row = rep(c(1:16),2)) %>%
  pivot_wider(names_from = Gender, values_from = Salary) %>%
  select(-Row)
```
]
.pull-right[
```
# A tibble: 16 × 2
   Female   Male
    <dbl>  <dbl>
 1 41514. 47343.
 2 40964. 46382.
 3 39170. 45813.
 4 37937. 46410.
 5 33982. 43796.
 6 36077. 43124.
 7 39174. 49444.
 8 39037. 44806.
 9 29132. 44440.
10 36200. 46680.
11 38561. 47337.
12 33248. 47299.
13 33609. 41461.
14 33669. 43598.
15 37806. 43431.
16 35846. 49266.
```
]

---

## Visualising Independent Samples

``` r
ggplot(OregonSalaries) +
  aes(x=Gender, y=Salary) +
  geom_boxplot() +
  theme_xaringan()
```

<img src="index_files/figure-html/unnamed-chunk-5-1.svg" width="468" />

---

## Visualising Independent Samples

``` r
ggplot(OregonSalaries) +
  aes(x=Gender, y=Salary, fill=Gender) +
  geom_violin() +
  theme_xaringan()
```

<img src="index_files/figure-html/unnamed-chunk-6-1.svg" width="468" />

---

## About Those Squares: Deviations

The mean of all salaries is 41142.433. Represented in equation form, we have:

`$$Salary_{i} = \alpha + \epsilon_{i}$$`

The `\(i^{th}\)` person's salary is some average salary `\(\alpha\)` [or perhaps `\(\mu\)` to maintain conceptual continuity] (shown as a solid blue line) plus some idiosyncratic remainder, or residual salary, for individual `\(i\)`, denoted by `\(\epsilon_{i}\)` (shown as a blue arrow). Everything here is measured in dollars.

`$$Salary_{i} = 41142.433 + \epsilon_{i}$$`

---
class: left
background-image: url("img/Dev1.png")
background-position: right

--

```
# A tibble: 16 × 2
   Female[,1] Male[,1]
        <dbl>    <dbl>
 1       372.    6200.
 2      -178.    5240.
 3     -1972.    4670.
 4     -3206.    5267.
 5     -7161.    2654.
 6     -5065.    1982.
 7     -1968.    8301.
 8     -2105.    3663.
 9    -12011.    3298.
10     -4942.    5537.
11     -2581.    6195.
12     -7895.    6156.
13     -7533.     319.
14     -7473.    2456.
15     -3337.    2289.
16     -5296.    8124.
```

---

By definition, those vertical distances sum to zero. **This sum-to-zero constraint is what consumes a degree of freedom; it is why the standard deviation has N-1 degrees of freedom.** The distance from the point to the line is also shown in blue; that is the observed residual salary. It shows how far that individual's salary is from the overall average.

---
class: left
background-image: url("img/Dev2.png")
background-position: right

# A Big Black Box

--

```
# A tibble: 16 × 2
   Female[,1]  Male[,1]
        <dbl>     <dbl>
 1    138350. 38442803.
 2     31813. 27457097.
 3   3889736. 21813358.
 4  10277545. 27743644.
 5  51274988.  7041698.
 6  25655866.  3926692.
 7   3874503. 68912991.
 8   4431282. 13420200.
 9 144256540. 10876058.
10  24423237. 30660131.
11   6661738. 38373872.
12  62323288. 37899934.
13  56745270.   101515.
14  55848872.  6031248.
15  11132919.  5238385.
16  28050778. 65999032.
```

```
  sum(Salary.Deviation.Squared)
1                     892955384
```

---

## The Black Box of Salaries

The total area of the `black box` in the original metric (squared dollars) is 892955385. The length of each side is the square root of that area, i.e., 29882.36 dollars.

---
class: inverse
background-image: url("img/Dev3.png")
background-position: bottom

This square, divided by the error variance `\(\sigma^2\)`, has a known distribution, `\(\chi^2\)` with `\(N-1\)` degrees of freedom, *if we assume that the deviations, call them errors, are normal.* Dividing the square by its degrees of freedom gives the *mean square*.

---

# The `\(\chi^2\)` distribution

The `\(\chi^2\)` [chi-squared] distribution can be derived as the sum of `\(k\)` squared `\(Z\)` or standard normal variables. Because they are squares, they are never negative. The `\(k\)` is called *degrees of freedom*. For nested models, differences in (scaled) sums of squares also have a `\(\chi^2\)` distribution, with degrees of freedom equal to the difference in degrees of freedom.
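These claims can be checked by simulation. The block below is a minimal base-R sketch, not part of the original analysis: the seed, `k = 5`, `N = 32` (chosen to match the 32 salaries), the replication counts, and the helper `chisq_pdf()` are illustrative assumptions. It checks that a sum of `k` squared standard normals has mean `k` and variance `2k`, that the standard closed-form `\(\chi^2\)` density agrees with R's `dchisq()`, and that squared deviations from a *sample* mean average `N-1` rather than `N`.

``` r
set.seed(2024)

# 1. Sum of k squared standard normals ~ chi-squared(k): mean k, variance 2k
k <- 5
reps <- 100000
z <- matrix(rnorm(reps * k), nrow = reps, ncol = k)
chi_sims <- rowSums(z^2)
c(mean = mean(chi_sims), var = var(chi_sims))  # should be near 5 and 10

# 2. The chi-squared density written out by hand (hypothetical helper):
#    f(x) = x^(k/2 - 1) * exp(-x/2) / (2^(k/2) * Gamma(k/2)), x > 0
chisq_pdf <- function(x, k) {
  x^(k / 2 - 1) * exp(-x / 2) / (2^(k / 2) * gamma(k / 2))
}
grid <- c(0.5, 1, 2, 5, 10)
all.equal(chisq_pdf(grid, k), dchisq(grid, df = k))  # TRUE

# 3. Deviations from the sample mean sum to zero ...
N <- 32
e <- rnorm(N)
sum(e - mean(e))  # zero up to floating-point rounding

# ... so N squared deviations carry only N - 1 degrees of freedom:
# with unit variance, their sum averages N - 1 = 31, not N = 32
ss_sims <- replicate(10000, {
  draws <- rnorm(N)
  sum((draws - mean(draws))^2)
})
mean(ss_sims)
```

Part 3 is the degree-of-freedom story from the deviations slide: estimating the mean forces the deviations to sum to zero, and that constraint costs one degree of freedom.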
As a technical matter (for `\(x > 0\)`):

`$$f(x) = \frac{1}{2^{\frac{k}{2}}\Gamma(k/2)} x^{\frac{k}{2}-1}e^{-\frac{x}{2}}$$`

---

# Some language and `\(F\)`

*Sum of squares*:

`$$\sum_{i=1}^{N} e_{i}^{2}$$`

*Mean square*: divide the sum of squares by its `\(k\)` degrees of freedom. NB: Take the square root and you get the standard deviation.

`$$\frac{1}{k}\sum_{i=1}^{N} e_{i}^{2}$$`

Fisher's `\(F\)` ratio, or simply `\(F\)`, is the ratio of two independent `\(\chi^2\)` variables, each divided by its degrees of freedom: a ratio of two mean squares, with numerator and denominator degrees of freedom. As the ratio grows, we obtain greater evidence that the two underlying variances are not the same. Let's try that here.

---

# The Core Conditional

But all of this stems from the presumption that `\(e_{i}\)` is normal, a claim that cannot be proven outright. *It is a core assumption that we should try to verify/falsify.* If the `\(e_{i}\)` are normal, their (scaled) sums of squares are `\(\chi^2\)`<sup>a</sup> and the ratio of their mean squares is `\(F\)`. As an aside, the technical definition of `\(t\)`<sup>b</sup> is a standard normal divided by the square root of an independent `\(\chi^2\)` over its degrees of freedom, so `\(t^2\)` is exactly `\(F\)` with one degree of freedom in the numerator.

.footnote[
<sup>a</sup> This is why the various results in prop.test are reported as X-squared.

<sup>b</sup> I mentioned this fact previously when introducing t (defined by degrees of freedom and with metric standard error.)
]

---

# Verification of the Normal

1. Plots [shape]
2. The quantile-quantile plot
3. Statistical tests

---

# plots

``` r
plot(density(lm(OregonSalaries$Salary~1)$residuals), main="Residual Salary")
```

<img src="index_files/figure-html/unnamed-chunk-7-1.svg" width="468" />

---

### the quantile-quantile plot of a normal

.pull-left[
The mean of residual salary is zero. The standard deviation is the root-mean-square. Compare observed residual salary [as z] to the hypothetical normal.
``` r
# Standardize the intercept-only residuals, sort them, and pair each with
# the normal quantile at plotting position i/(N+1) (i/33 for 32 salaries)
data.frame(x=scale(lm(OregonSalaries$Salary~1)$residuals)) %>%
  arrange(x) %>%
  mutate(y=qnorm(row_number()/(n()+1))) %>%
  ggplot() +
  aes(x=x, y=y) +
  geom_point()
```
]
.pull-right[
<img src="index_files/figure-html/unnamed-chunk-9-1.svg" width="468" />
]

---

## tests

We can examine the claim that residual salary is plausibly normal by asking how closely the q-q plot follows a straight line. The Shapiro-Wilk test does this under the null hypothesis of a normal: its `\(W\)` statistic is closely related to the squared correlation between the sorted residuals and the values expected from a normal, so values near 1 are consistent with normality.

``` r
shapiro.test(lm(Salary~1, data=OregonSalaries)$residuals)
```

```
	Shapiro-Wilk normality test

data:  lm(Salary ~ 1, data = OregonSalaries)$residuals
W = 0.9591, p-value = 0.2595
```

With p = 0.26, we cannot reject normality for residual salary.

---

## Linear Models

Linear models expand on the previous slides by unpacking the box, shrinking the residual squares through the inclusion of *features, explanatory variables, explanatory factors, exogenous inputs, predictor variables*. In this case, let us posit that salary is a function of gender. As an equation, that yields:

`$$Salary_{i} = \alpha + \beta_{1}*Gender_{i} + \epsilon_{i}$$`

where `\(Gender_{i}\)` is 1 for males and 0 for females. We want to measure that `\(\beta_{1}\)`; in this case, the difference between Male and Female. By default, R treats the first factor level (alphabetically, Female) as the baseline absorbed into the constant. What does the regression imply? That salary for each individual `\(i\)` is a function of a constant and gender. Given the way that R works, `\(\alpha\)` will represent the average for females and `\(\beta_{1}\)` will represent the difference between male and female average salaries. The `\(\epsilon_{i}\)` will capture the difference between the individual's salary and the average of their group (the mean of males or females).

---

## A New Residual Sum of Squares

.pull-left[
The picture will now have a red line and a black line, and the residual/leftover/unexplained salary is now the difference between each point and its group's line (red arrows or black arrows). What is the relationship between the datum and the group mean? The answer is shown in black/red.
]
.pull-right[
<img src="index_files/figure-html/BasePlot-1.svg" width="468" />
]

---

## The Squares

The sum of the remaining squared vertical distances is 238621277; it is obtained by squaring each black/red number and summing them. The amount explained by gender [measured in squared dollars] is the sum of the squared blue numbers minus the sum of the squared black/red numbers. Notice that the highest paid females and the lowest paid males may have more residual salary given two averages, but the two averages, overall, lead to far less residual salary than a single average for all salaries. Indeed, gender alone accounts for:

``` r
# Total sum of squares (deviations from the overall mean)
# minus the residual sum of squares from the gender model
sum(scale(OregonSalaries$Salary, scale = FALSE)^2) -
  sum(lm(Salary~Gender, data=OregonSalaries)$residuals^2)
```

```
[1] 654334108
```

Intuitively, *Gender* should account for 16 times the squared difference between the Female and Overall Averages (4522) plus 16 times the squared difference between the Male and Overall Averages (also 4522). With equal group sizes, the overall mean sits exactly halfway between the two group means, so the two pieces are equal and the total is 32 times one squared difference:

``` r
32*(mean(OregonSalaries$Salary[OregonSalaries$Gender=="Female"], na.rm=TRUE) -
      mean(OregonSalaries$Salary))^2
```

```
[1] 654334108
```

---

## A Visual: What Proportion is Accounted For?

<img src="index_files/figure-html/BBReg-1.svg" width="468" />

---

## Regression Tables

``` r
Model.1 <- lm(Salary~Gender, data=OregonSalaries)
summary(Model.1)
```

```
Call:
lm(formula = Salary ~ Gender, data = OregonSalaries)

Residuals:
    Min      1Q  Median      3Q     Max 
-7488.7 -2107.9   433.3  1743.9  4893.9 

Coefficients:
            Estimate Std. Error t value       Pr(>|t|)    
(Intercept)  36620.5      705.1   51.94        < 2e-16 ***
GenderMale    9043.9      997.1    9.07 0.000000000422 ***
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 2820 on 30 degrees of freedom
Multiple R-squared:  0.7328,  Adjusted R-squared:  0.7239 
F-statistic: 82.26 on 1 and 30 DF,  p-value: 0.0000000004223
```

---
class: inverse
background-image: url("img/Fbox.png")
background-position: bottom
background-size: 600px 150px

## Analysis of Variance: The Squares

F is the ratio of the two mean squares. It is also `\(t^2\)`, the square of the *t* value on GenderMale: `\(9.07^2 \approx 82.26\)`.

.small[
``` r
anova(Model.1)
```

```
Analysis of Variance Table

Response: Salary
          Df    Sum Sq   Mean Sq F value          Pr(>F)    
Gender     1 654334108 654334108  82.264 0.0000000004223 ***
Residuals 30 238621277   7954043                            
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```
]

---
background-image: url("img/F130.jpg")
background-size: contain

---
background-image: url("img/radiant-ORSlm.jpg")
background-size: contain

---
class: center, middle, inverse

# The Idea Scales to both Quantitative Variables and Multiple Categories

---

### A Multi-Category Example

``` r
Model.2 <- lm(Expenditures~Age.Cohort, data=WH)
summary(Model.2)
```

```
Call:
lm(formula = Expenditures ~ Age.Cohort, data = WH)

Residuals:
     Min       1Q   Median       3Q      Max 
-20157.4  -1175.2     21.9   1160.9  16348.4 

Coefficients:
                Estimate Std. Error t value Pr(>|t|)    
(Intercept)       2224.9      330.0   6.742 3.07e-11 ***
Age.Cohort0 - 5   -839.9      584.9  -1.436 0.151368    
Age.Cohort13-17   1710.3      443.5   3.856 0.000125 ***
Age.Cohort18-21   7816.2      458.7  17.040  < 2e-16 ***
Age.Cohort22-50  38142.7      440.1  86.668  < 2e-16 ***
Age.Cohort51 +   51042.5      537.3  95.000  < 2e-16 ***
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 3863 on 771 degrees of freedom
Multiple R-squared:  0.9613,  Adjusted R-squared:  0.9611 
F-statistic:  3833 on 5 and 771 DF,  p-value: < 2.2e-16
```

---

## `anova`

``` r
anova(Model.2)
```

```
Analysis of Variance Table

Response: Expenditures
            Df       Sum Sq     Mean Sq F value    Pr(>F)    
Age.Cohort   5 285981965642 57196393128  3833.3 < 2.2e-16 ***
Residuals  771  11503984348    14920862                      
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```

``` r
confint(Model.2)
```

```
                     2.5 %     97.5 %
(Intercept)      1577.0735  2872.7514
Age.Cohort0 - 5 -1988.0158   308.1598
Age.Cohort13-17   839.7131  2580.8856
Age.Cohort18-21  6915.7707  8716.7038
Age.Cohort22-50 37278.7115 39006.5887
Age.Cohort51 +  49987.7479 52097.1984
```

---

# Not a Lot Left....

``` r
black.box.maker(Model.2)
```

<img src="index_files/figure-html/unnamed-chunk-17-1.svg" width="468" />

---
class: center, middle

# Bullseye

---
class: inverse

## Messy

``` r
qqnorm(residuals(Model.2))
```

<img src="index_files/figure-html/unnamed-chunk-18-1.svg" width="468" />

---

## What about Ethnicity?

``` r
Model.3 <- lm(Expenditures ~ Ethnicity + Age.Cohort, data=WH)
summary(Model.3)
```

```
Call:
lm(formula = Expenditures ~ Ethnicity + Age.Cohort, data = WH)

Residuals:
     Min       1Q   Median       3Q      Max 
-20075.0  -1206.7      0.1   1202.1  16446.7 

Coefficients:
                            Estimate Std. Error t value Pr(>|t|)    
(Intercept)                   2359.9      344.7   6.846 1.55e-11 ***
EthnicityWhite not Hispanic   -402.1      298.3  -1.348 0.178045    
Age.Cohort0 - 5               -849.3      584.6  -1.453 0.146685    
Age.Cohort13-17               1733.8      443.6   3.908 0.000101 ***
Age.Cohort18-21               7870.0      460.2  17.101  < 2e-16 ***
Age.Cohort22-50              38311.5      457.4  83.768  < 2e-16 ***
Age.Cohort51 +               51227.2      554.2  92.432  < 2e-16 ***
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 3861 on 770 degrees of freedom
Multiple R-squared:  0.9614,  Adjusted R-squared:  0.9611 
F-statistic:  3198 on 6 and 770 DF,  p-value: < 2.2e-16
```

---
class: inverse

``` r
confint(Model.3)
```

```
                                 2.5 %     97.5 %
(Intercept)                  1683.2345  3036.6106
EthnicityWhite not Hispanic  -987.6405   183.4497
Age.Cohort0 - 5             -1996.8464   298.2797
Age.Cohort13-17               862.9646  2604.5597
Age.Cohort18-21              6966.5797  8773.3519
Age.Cohort22-50             37413.6880 39209.3043
Age.Cohort51 +              50139.2506 52315.1525
```

---
class: inverse

``` r
anova(Model.2, Model.3)
```

```
Analysis of Variance Table

Model 1: Expenditures ~ Age.Cohort
Model 2: Expenditures ~ Ethnicity + Age.Cohort
  Res.Df         RSS Df Sum of Sq      F Pr(>F)
1    771 11503984348                           
2    770 11476899027  1  27085321 1.8172  0.178
```

At the margin, *Ethnicity* accounts for little additional variance: the `\(F\)` of 1.8172 (p = 0.178) is the square of its *t* value in `Model.3`, since `\((-1.348)^2 \approx 1.817\)`.

---

# Some Basic Cost Accounting

`$$Total.Cost_{t} = \alpha + \beta*units_t + \epsilon_{t}$$`

With per-period (t) data on costs and units produced, we can partition costs into fixed costs `\(\alpha\)` and variable costs `\(\beta\)` (cost per unit). Consider the data on Handmade Bags. We want to accomplish two things: first, to measure the two key quantities; second, to predict costs for hypothetical numbers of units.
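A minimal sketch of both tasks follows. It uses *simulated* data rather than the Handmade Bags file, and every specific is a hypothetical choice: the fixed cost of 10000 per period, the variable cost of 25 per unit, the noise level, the seed, and the data frame name `sim`. With the real data the model would be `lm(TotCost ~ units, data = HMB)`. The sketch also previews the comment on correlation. In simple regression the slope equals `cov(x, y)/var(x)`, or equivalently `r * s_y / s_x`, and R-squared equals the squared correlation.

``` r
# Hypothetical cost data: fixed cost 10000 per period, 25 per unit, noise sd 1000
set.seed(123)
n <- 60
units <- sample(140:580, n, replace = TRUE)
tot_cost <- 10000 + 25 * units + rnorm(n, sd = 1000)
sim <- data.frame(units = units, TotCost = tot_cost)

# Total.Cost = alpha + beta * units + e
cost_fit <- lm(TotCost ~ units, data = sim)
alpha_hat <- unname(coef(cost_fit)["(Intercept)"])  # estimated fixed cost
beta_hat  <- unname(coef(cost_fit)["units"])        # estimated cost per unit

# The same slope and intercept from covariance and correlation
r         <- cor(sim$units, sim$TotCost)
beta_cov  <- cov(sim$units, sim$TotCost) / var(sim$units)
beta_cor  <- r * sd(sim$TotCost) / sd(sim$units)
alpha_cov <- mean(sim$TotCost) - beta_cov * mean(sim$units)

# In simple regression, R-squared is the squared correlation
r_sq <- summary(cost_fit)$r.squared
c(beta_hat = beta_hat, beta_cov = beta_cov, beta_cor = beta_cor)
c(r_squared = r_sq, r_times_r = r^2)

# Predicted total cost for hypothetical production levels inside the observed range
new_units <- data.frame(units = c(200, 400, 550))
predict(cost_fit, newdata = new_units, interval = "prediction")
```

Prediction intervals fit the second goal because it concerns a single period's cost. `interval = "confidence"` would instead describe uncertainty about the *average* cost at that output level.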
**A comment on correlation**

---

# The Data

``` r
HMB %>% skim() %>% kable(format = "html", digits = 3)
```

<table>
<thead>
<tr> <th style="text-align:left;"> skim_type </th> <th style="text-align:left;"> skim_variable </th> <th style="text-align:right;"> n_missing </th> <th style="text-align:right;"> complete_rate </th> <th style="text-align:right;"> numeric.mean </th> <th style="text-align:right;"> numeric.sd </th> <th style="text-align:right;"> numeric.p0 </th> <th style="text-align:right;"> numeric.p25 </th> <th style="text-align:right;"> numeric.p50 </th> <th style="text-align:right;"> numeric.p75 </th> <th style="text-align:right;"> numeric.p100 </th> <th style="text-align:left;"> numeric.hist </th> </tr>
</thead>
<tbody>
<tr> <td style="text-align:left;"> numeric </td> <td style="text-align:left;"> units </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 351.967 </td> <td style="text-align:right;"> 131.665 </td> <td style="text-align:right;"> 141.00 </td> <td style="text-align:right;"> 241.5 </td> <td style="text-align:right;"> 356.00 </td> <td style="text-align:right;"> 457.0 </td> <td style="text-align:right;"> 580.00 </td> <td style="text-align:left;"> ▇▇▆▇▆ </td> </tr>
<tr> <td style="text-align:left;"> numeric </td> <td style="text-align:left;"> TotCost </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 19946.865 </td> <td style="text-align:right;"> 2985.202 </td> <td style="text-align:right;"> 14357.98 </td> <td style="text-align:right;"> 17593.8 </td> <td style="text-align:right;"> 20073.93 </td> <td style="text-align:right;"> 22415.2 </td> <td style="text-align:right;"> 24887.83 </td> <td style="text-align:left;"> ▆▇▇▇▇ </td> </tr>
</tbody>
</table>

---
class: inverse

### A Look at the Data

``` r
p1 <- ggplot(HMB) +
  aes(x=units, y=TotCost) +
  geom_point() +
  labs(y="Total Costs per period", x="Units")
plotly::ggplotly(p1)
```
*[Interactive plotly scatter plot: Total Costs per period (y-axis) against Units (x-axis) for the Handmade Bags data.]*

---

## The Thing to Measure

``` r
p1 <- ggplot(HMB) +
  aes(x=units, y=TotCost) +
  geom_point() +
  geom_smooth(method="lm") +
  labs(y="Total Costs per period", x="Units")
plotly::ggplotly(p1)
```

<div class="plotly html-widget html-fill-item" id="htmlwidget-472f792603288e418c48" style="width:468px;height:432px;"></div> <script type="application/json"
data-for="htmlwidget-472f792603288e418c48">{"x":{"data":[{"x":[268,279,430,141,412,574,456,196,386,258,450,316,352,292,263,525,143,267,326,460,580,566,176,209,400,447,360,189,151,471,405,506,376,269,483,434,173,217,231,174,186,290,206,172,470,159,544,428,438,490,245,546,554,398,349,332,393,296,517,494],"y":[19624.30773,16790.414919999999,21489.106769999999,15998.026739999999,23771.945670000001,24365.475600000002,23724.837879999999,16928.974829999999,20738.208920000001,17747.662919999999,21480.214510000002,17559.589970000001,19731.90741,19295.200499999999,17828.956999999999,24175.42698,14357.98395,18524.813630000001,20087.481749999999,22301.031340000001,24627.96587,23409.539100000002,15309.48682,16182.97191,20755.601170000002,22430.954320000001,19190.331549999999,18167.357080000002,15246.063050000001,21328.64645,20245.94196,23880.932120000001,20126.656780000001,18376.04508,23284.726979999999,21969.43476,14994.43448,15726.92079,16424.787120000001,17410.359799999998,17605.205809999999,19545.994340000001,15300.08244,17178.100770000001,22409.951349999999,15485.60432,24240.003100000002,20774.9159,21635.60254,22734.845170000001,18000.14228,24441.090390000001,24887.829160000001,20616.763790000001,20561.779330000001,19694.498950000001,20060.386170000002,18999.210159999999,23251.123370000001,23778.029210000001],"text":["units: 268<br />TotCost: 19624.31","units: 279<br />TotCost: 16790.41","units: 430<br />TotCost: 21489.11","units: 141<br />TotCost: 15998.03","units: 412<br />TotCost: 23771.95","units: 574<br />TotCost: 24365.48","units: 456<br />TotCost: 23724.84","units: 196<br />TotCost: 16928.97","units: 386<br />TotCost: 20738.21","units: 258<br />TotCost: 17747.66","units: 450<br />TotCost: 21480.21","units: 316<br />TotCost: 17559.59","units: 352<br />TotCost: 19731.91","units: 292<br />TotCost: 19295.20","units: 263<br />TotCost: 17828.96","units: 525<br />TotCost: 24175.43","units: 143<br />TotCost: 14357.98","units: 267<br />TotCost: 18524.81","units: 326<br 
/>TotCost: 20087.48","units: 460<br />TotCost: 22301.03","units: 580<br />TotCost: 24627.97","units: 566<br />TotCost: 23409.54","units: 176<br />TotCost: 15309.49","units: 209<br />TotCost: 16182.97","units: 400<br />TotCost: 20755.60","units: 447<br />TotCost: 22430.95","units: 360<br />TotCost: 19190.33","units: 189<br />TotCost: 18167.36","units: 151<br />TotCost: 15246.06","units: 471<br />TotCost: 21328.65","units: 405<br />TotCost: 20245.94","units: 506<br />TotCost: 23880.93","units: 376<br />TotCost: 20126.66","units: 269<br />TotCost: 18376.05","units: 483<br />TotCost: 23284.73","units: 434<br />TotCost: 21969.43","units: 173<br />TotCost: 14994.43","units: 217<br />TotCost: 15726.92","units: 231<br />TotCost: 16424.79","units: 174<br />TotCost: 17410.36","units: 186<br />TotCost: 17605.21","units: 290<br />TotCost: 19545.99","units: 206<br />TotCost: 15300.08","units: 172<br />TotCost: 17178.10","units: 470<br />TotCost: 22409.95","units: 159<br />TotCost: 15485.60","units: 544<br />TotCost: 24240.00","units: 428<br />TotCost: 20774.92","units: 438<br />TotCost: 21635.60","units: 490<br />TotCost: 22734.85","units: 245<br />TotCost: 18000.14","units: 546<br />TotCost: 24441.09","units: 554<br />TotCost: 24887.83","units: 398<br />TotCost: 20616.76","units: 349<br />TotCost: 20561.78","units: 332<br />TotCost: 19694.50","units: 393<br />TotCost: 20060.39","units: 296<br />TotCost: 18999.21","units: 517<br />TotCost: 23251.12","units: 494<br />TotCost: 
23778.03"],"type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(55,17,66,1)","opacity":1,"size":5.6692913385826778,"symbol":"circle","line":{"width":1.8897637795275593,"color":"rgba(55,17,66,1)"}},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null},{"x":[141,146.55696202531647,152.1139240506329,157.67088607594937,163.22784810126583,168.78481012658227,174.34177215189874,179.8987341772152,185.45569620253164,191.01265822784811,196.56962025316454,202.12658227848101,207.68354430379748,213.24050632911394,218.79746835443038,224.35443037974684,229.91139240506328,235.46835443037975,241.02531645569621,246.58227848101268,252.13924050632912,257.69620253164555,263.25316455696202,268.81012658227849,274.36708860759495,279.92405063291142,285.48101265822788,291.03797468354435,296.59493670886076,302.15189873417722,307.70886075949369,313.2658227848101,318.82278481012656,324.37974683544303,329.9367088607595,335.49367088607596,341.05063291139243,346.60759493670889,352.16455696202536,357.72151898734182,363.27848101265823,368.8354430379747,374.39240506329116,379.94936708860757,385.50632911392404,391.0632911392405,396.62025316455697,402.17721518987344,407.7341772151899,413.29113924050637,418.84810126582278,424.40506329113924,429.96202531645571,435.51898734177217,441.07594936708864,446.63291139240511,452.18987341772151,457.74683544303798,463.30379746835445,468.86075949367091,474.41772151898738,479.97468354430384,485.53164556962025,491.08860759493672,496.64556962025318,502.20253164556965,507.75949367088612,513.31645569620252,518.87341772151899,524.43037974683546,529.98734177215192,535.54430379746839,541.10126582278485,546.65822784810121,552.21518987341778,557.77215189873414,563.32911392405072,568.88607594936707,574.44303797468365,580],"y":[15368.560853892319,15489.15554911495,15609.75024433758,15730.344939560211,15850.939634782841,15971.534330005472,16092.129025228103,16212.723720450733,16333.318415673362,16453.9131108959
92,16574.507806118625,16695.102501341255,16815.697196563884,16936.291891786517,17056.886587009147,17177.481282231776,17298.075977454406,17418.670672677035,17539.265367899668,17659.860063122298,17780.454758344928,17901.049453567561,18021.64414879019,18142.23884401282,18262.833539235449,18383.428234458082,18504.022929680712,18624.617624903345,18745.212320125975,18865.807015348604,18986.401710571234,19106.996405793863,19227.591101016493,19348.185796239126,19468.780491461755,19589.375186684385,19709.969881907018,19830.564577129648,19951.159272352277,20071.753967574907,20192.348662797536,20312.943358020169,20433.538053242799,20554.132748465428,20674.727443688062,20795.322138910691,20915.916834133321,21036.51152935595,21157.106224578583,21277.700919801213,21398.295615023842,21518.890310246476,21639.485005469105,21760.079700691735,21880.674395914364,22001.269091136994,22121.863786359623,22242.458481582256,22363.05317680489,22483.647872027519,22604.242567250149,22724.837262472778,22845.431957695408,22966.026652918037,23086.62134814067,23207.216043363303,23327.810738585933,23448.405433808563,23569.000129031192,23689.594824253822,23810.189519476451,23930.784214699084,24051.378909921714,24171.973605144343,24292.568300366976,24413.162995589606,24533.757690812236,24654.352386034865,24774.947081257498,24895.541776480124],"text":["units: 141.0000<br />TotCost: 15368.56","units: 146.5570<br />TotCost: 15489.16","units: 152.1139<br />TotCost: 15609.75","units: 157.6709<br />TotCost: 15730.34","units: 163.2278<br />TotCost: 15850.94","units: 168.7848<br />TotCost: 15971.53","units: 174.3418<br />TotCost: 16092.13","units: 179.8987<br />TotCost: 16212.72","units: 185.4557<br />TotCost: 16333.32","units: 191.0127<br />TotCost: 16453.91","units: 196.5696<br />TotCost: 16574.51","units: 202.1266<br />TotCost: 16695.10","units: 207.6835<br />TotCost: 16815.70","units: 213.2405<br />TotCost: 16936.29","units: 218.7975<br />TotCost: 17056.89","units: 224.3544<br />TotCost: 
17177.48","units: 229.9114<br />TotCost: 17298.08","units: 235.4684<br />TotCost: 17418.67","units: 241.0253<br />TotCost: 17539.27","units: 246.5823<br />TotCost: 17659.86","units: 252.1392<br />TotCost: 17780.45","units: 257.6962<br />TotCost: 17901.05","units: 263.2532<br />TotCost: 18021.64","units: 268.8101<br />TotCost: 18142.24","units: 274.3671<br />TotCost: 18262.83","units: 279.9241<br />TotCost: 18383.43","units: 285.4810<br />TotCost: 18504.02","units: 291.0380<br />TotCost: 18624.62","units: 296.5949<br />TotCost: 18745.21","units: 302.1519<br />TotCost: 18865.81","units: 307.7089<br />TotCost: 18986.40","units: 313.2658<br />TotCost: 19107.00","units: 318.8228<br />TotCost: 19227.59","units: 324.3797<br />TotCost: 19348.19","units: 329.9367<br />TotCost: 19468.78","units: 335.4937<br />TotCost: 19589.38","units: 341.0506<br />TotCost: 19709.97","units: 346.6076<br />TotCost: 19830.56","units: 352.1646<br />TotCost: 19951.16","units: 357.7215<br />TotCost: 20071.75","units: 363.2785<br />TotCost: 20192.35","units: 368.8354<br />TotCost: 20312.94","units: 374.3924<br />TotCost: 20433.54","units: 379.9494<br />TotCost: 20554.13","units: 385.5063<br />TotCost: 20674.73","units: 391.0633<br />TotCost: 20795.32","units: 396.6203<br />TotCost: 20915.92","units: 402.1772<br />TotCost: 21036.51","units: 407.7342<br />TotCost: 21157.11","units: 413.2911<br />TotCost: 21277.70","units: 418.8481<br />TotCost: 21398.30","units: 424.4051<br />TotCost: 21518.89","units: 429.9620<br />TotCost: 21639.49","units: 435.5190<br />TotCost: 21760.08","units: 441.0759<br />TotCost: 21880.67","units: 446.6329<br />TotCost: 22001.27","units: 452.1899<br />TotCost: 22121.86","units: 457.7468<br />TotCost: 22242.46","units: 463.3038<br />TotCost: 22363.05","units: 468.8608<br />TotCost: 22483.65","units: 474.4177<br />TotCost: 22604.24","units: 479.9747<br />TotCost: 22724.84","units: 485.5316<br />TotCost: 22845.43","units: 491.0886<br />TotCost: 22966.03","units: 496.6456<br 
/>TotCost: 23086.62","units: 502.2025<br />TotCost: 23207.22","units: 507.7595<br />TotCost: 23327.81","units: 513.3165<br />TotCost: 23448.41","units: 518.8734<br />TotCost: 23569.00","units: 524.4304<br />TotCost: 23689.59","units: 529.9873<br />TotCost: 23810.19","units: 535.5443<br />TotCost: 23930.78","units: 541.1013<br />TotCost: 24051.38","units: 546.6582<br />TotCost: 24171.97","units: 552.2152<br />TotCost: 24292.57","units: 557.7722<br />TotCost: 24413.16","units: 563.3291<br />TotCost: 24533.76","units: 568.8861<br />TotCost: 24654.35","units: 574.4430<br />TotCost: 24774.95","units: 580.0000<br />TotCost: 24895.54"],"type":"scatter","mode":"lines","name":"fitted values","line":{"width":3.7795275590551185,"color":"rgba(28,82,83,1)","dash":"solid"},"hoveron":"points","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null},{"x":[141,146.55696202531647,152.1139240506329,157.67088607594937,163.22784810126583,168.78481012658227,174.34177215189874,179.8987341772152,185.45569620253164,191.01265822784811,196.56962025316454,202.12658227848101,207.68354430379748,213.24050632911394,218.79746835443038,224.35443037974684,229.91139240506328,235.46835443037975,241.02531645569621,246.58227848101268,252.13924050632912,257.69620253164555,263.25316455696202,268.81012658227849,274.36708860759495,279.92405063291142,285.48101265822788,291.03797468354435,296.59493670886076,302.15189873417722,307.70886075949369,313.2658227848101,318.82278481012656,324.37974683544303,329.9367088607595,335.49367088607596,341.05063291139243,346.60759493670889,352.16455696202536,357.72151898734182,363.27848101265823,368.8354430379747,374.39240506329116,379.94936708860757,385.50632911392404,391.0632911392405,396.62025316455697,402.17721518987344,407.7341772151899,413.29113924050637,418.84810126582278,424.40506329113924,429.96202531645571,435.51898734177217,441.07594936708864,446.63291139240511,452.18987341772151,457.74683544303798,463.30379746835445,468.86075949367091,474.4177215
1898738,479.97468354430384,485.53164556962025,491.08860759493672,496.64556962025318,502.20253164556965,507.75949367088612,513.31645569620252,518.87341772151899,524.43037974683546,529.98734177215192,535.54430379746839,541.10126582278485,546.65822784810121,552.21518987341778,557.77215189873414,563.32911392405072,568.88607594936707,574.44303797468365,580,580,580,574.44303797468365,568.88607594936707,563.32911392405072,557.77215189873414,552.21518987341778,546.65822784810121,541.10126582278485,535.54430379746839,529.98734177215192,524.43037974683546,518.87341772151899,513.31645569620252,507.75949367088612,502.20253164556965,496.64556962025318,491.08860759493672,485.53164556962025,479.97468354430384,474.41772151898738,468.86075949367091,463.30379746835445,457.74683544303798,452.18987341772151,446.63291139240511,441.07594936708864,435.51898734177217,429.96202531645571,424.40506329113924,418.84810126582278,413.29113924050637,407.7341772151899,402.17721518987344,396.62025316455697,391.0632911392405,385.50632911392404,379.94936708860757,374.39240506329116,368.8354430379747,363.27848101265823,357.72151898734182,352.16455696202536,346.60759493670889,341.05063291139243,335.49367088607596,329.9367088607595,324.37974683544303,318.82278481012656,313.2658227848101,307.70886075949369,302.15189873417722,296.59493670886076,291.03797468354435,285.48101265822788,279.92405063291142,274.36708860759495,268.81012658227849,263.25316455696202,257.69620253164555,252.13924050632912,246.58227848101268,241.02531645569621,235.46835443037975,229.91139240506328,224.35443037974684,218.79746835443038,213.24050632911394,207.68354430379748,202.12658227848101,196.56962025316454,191.01265822784811,185.45569620253164,179.8987341772152,174.34177215189874,168.78481012658227,163.22784810126583,157.67088607594937,152.1139240506329,146.55696202531647,141,141],"y":[14940.464323439579,15069.18212278086,15197.836905755834,15326.424899936072,15454.94206086096,15583.384051211669,15711.7462186542,15840.023572369177,1
5968.210758321957,16096.302033373371,16224.291238391181,16352.171770597661,16479.936555482294,16607.578018723107,16735.088058698624,16862.458020336835,16989.67867123938,17116.740181238889,17243.632106792062,17370.343381876126,17496.862317331208,17623.176610860533,17749.273370141502,17875.139151682521,18000.76001814293,18126.121616769451,18251.209281337629,18376.008159467379,18500.503366358818,18624.680164838508,18748.524170114677,18872.021575856597,18995.159396234045,19117.925716534144,19240.309943125529,19362.303042109121,19483.897755229256,19605.088781726139,19725.872915910692,19846.249132331297,19966.218613331719,20085.784717290349,20204.952889510179,20323.730521191563,20442.126764797194,20560.15231614028,20677.819174554559,20795.140392544723,20912.129825495234,21028.801890546802,21145.171341882418,21261.253067642458,21377.061911718818,21492.612521912761,21607.91922447276,21722.995923896717,21837.856026082947,21952.512382414749,22066.977252114659,22181.262280152609,22295.378488083359,22409.336275375754,22523.145429040411,22636.815139633003,22750.354021984833,22863.770139276192,22977.071029311272,23090.263732070864,23203.354817809075,23316.350415122881,23429.256238559788,23542.077615442005,23654.819511677622,23767.48655640351,23880.083065363266,23992.613062969587,24105.080303035618,24217.488288186454,24329.840287981468,24442.139355792191,24442.139355792191,25348.944197168057,25220.053874533529,25091.216483883276,24962.435078588853,24833.712928209625,24705.053535370687,24576.460653885177,24447.938308165805,24319.490813956163,24191.122800393114,24062.839233384762,23934.645440253309,23806.547135546261,23678.550447860594,23550.661947450415,23422.888674296508,23295.238166203071,23167.718486350404,23040.338249569802,22913.106646416938,22786.03346390243,22659.12910149512,22532.404580749764,22405.871546636299,22279.542258377271,22153.429567355968,22027.546879470709,21901.908099219392,21776.527552850494,21651.419888165266,21526.599949055624,21402.082623661932,21277.8826661
67177,21154.014493712082,21030.491961681102,20907.328122578929,20784.534975739294,20662.123216975418,20540.101998749989,20418.478712263353,20297.258802818516,20176.445628793863,20056.040372533156,19936.04200858478,19816.447331259649,19697.251039797982,19578.445875944108,19460.022805798941,19341.971235731129,19224.27925102779,19106.9338658587,18989.921273893131,18873.227090339311,18756.836578023795,18640.734852146714,18524.907060327969,18409.338536343119,18294.014927438879,18178.922296274588,18064.047199358647,17949.37674436847,17834.898629007275,17720.601164115182,17606.473283669431,17492.504544126718,17378.685115319669,17265.005764849928,17151.457837645474,17038.033232084847,16924.724373846071,16811.524188418614,16698.426073024766,16585.423868532289,16472.511831802007,16359.684608799274,16246.937208704721,16134.264979184351,16021.663582919326,15909.128975449041,15796.657384345059,14940.464323439579],"text":["units: 141.0000<br />TotCost: 15368.56","units: 146.5570<br />TotCost: 15489.16","units: 152.1139<br />TotCost: 15609.75","units: 157.6709<br />TotCost: 15730.34","units: 163.2278<br />TotCost: 15850.94","units: 168.7848<br />TotCost: 15971.53","units: 174.3418<br />TotCost: 16092.13","units: 179.8987<br />TotCost: 16212.72","units: 185.4557<br />TotCost: 16333.32","units: 191.0127<br />TotCost: 16453.91","units: 196.5696<br />TotCost: 16574.51","units: 202.1266<br />TotCost: 16695.10","units: 207.6835<br />TotCost: 16815.70","units: 213.2405<br />TotCost: 16936.29","units: 218.7975<br />TotCost: 17056.89","units: 224.3544<br />TotCost: 17177.48","units: 229.9114<br />TotCost: 17298.08","units: 235.4684<br />TotCost: 17418.67","units: 241.0253<br />TotCost: 17539.27","units: 246.5823<br />TotCost: 17659.86","units: 252.1392<br />TotCost: 17780.45","units: 257.6962<br />TotCost: 17901.05","units: 263.2532<br />TotCost: 18021.64","units: 268.8101<br />TotCost: 18142.24","units: 274.3671<br />TotCost: 18262.83","units: 279.9241<br />TotCost: 18383.43","units: 
285.4810<br />TotCost: 18504.02","units: 291.0380<br />TotCost: 18624.62","units: 296.5949<br />TotCost: 18745.21","units: 302.1519<br />TotCost: 18865.81","units: 307.7089<br />TotCost: 18986.40","units: 313.2658<br />TotCost: 19107.00","units: 318.8228<br />TotCost: 19227.59","units: 324.3797<br />TotCost: 19348.19","units: 329.9367<br />TotCost: 19468.78","units: 335.4937<br />TotCost: 19589.38","units: 341.0506<br />TotCost: 19709.97","units: 346.6076<br />TotCost: 19830.56","units: 352.1646<br />TotCost: 19951.16","units: 357.7215<br />TotCost: 20071.75","units: 363.2785<br />TotCost: 20192.35","units: 368.8354<br />TotCost: 20312.94","units: 374.3924<br />TotCost: 20433.54","units: 379.9494<br />TotCost: 20554.13","units: 385.5063<br />TotCost: 20674.73","units: 391.0633<br />TotCost: 20795.32","units: 396.6203<br />TotCost: 20915.92","units: 402.1772<br />TotCost: 21036.51","units: 407.7342<br />TotCost: 21157.11","units: 413.2911<br />TotCost: 21277.70","units: 418.8481<br />TotCost: 21398.30","units: 424.4051<br />TotCost: 21518.89","units: 429.9620<br />TotCost: 21639.49","units: 435.5190<br />TotCost: 21760.08","units: 441.0759<br />TotCost: 21880.67","units: 446.6329<br />TotCost: 22001.27","units: 452.1899<br />TotCost: 22121.86","units: 457.7468<br />TotCost: 22242.46","units: 463.3038<br />TotCost: 22363.05","units: 468.8608<br />TotCost: 22483.65","units: 474.4177<br />TotCost: 22604.24","units: 479.9747<br />TotCost: 22724.84","units: 485.5316<br />TotCost: 22845.43","units: 491.0886<br />TotCost: 22966.03","units: 496.6456<br />TotCost: 23086.62","units: 502.2025<br />TotCost: 23207.22","units: 507.7595<br />TotCost: 23327.81","units: 513.3165<br />TotCost: 23448.41","units: 518.8734<br />TotCost: 23569.00","units: 524.4304<br />TotCost: 23689.59","units: 529.9873<br />TotCost: 23810.19","units: 535.5443<br />TotCost: 23930.78","units: 541.1013<br />TotCost: 24051.38","units: 546.6582<br />TotCost: 24171.97","units: 552.2152<br />TotCost: 
24292.57","units: 557.7722<br />TotCost: 24413.16","units: 563.3291<br />TotCost: 24533.76","units: 568.8861<br />TotCost: 24654.35","units: 574.4430<br />TotCost: 24774.95","units: 580.0000<br />TotCost: 24895.54","units: 580.0000<br />TotCost: 24895.54","units: 580.0000<br />TotCost: 24895.54","units: 574.4430<br />TotCost: 24774.95","units: 568.8861<br />TotCost: 24654.35","units: 563.3291<br />TotCost: 24533.76","units: 557.7722<br />TotCost: 24413.16","units: 552.2152<br />TotCost: 24292.57","units: 546.6582<br />TotCost: 24171.97","units: 541.1013<br />TotCost: 24051.38","units: 535.5443<br />TotCost: 23930.78","units: 529.9873<br />TotCost: 23810.19","units: 524.4304<br />TotCost: 23689.59","units: 518.8734<br />TotCost: 23569.00","units: 513.3165<br />TotCost: 23448.41","units: 507.7595<br />TotCost: 23327.81","units: 502.2025<br />TotCost: 23207.22","units: 496.6456<br />TotCost: 23086.62","units: 491.0886<br />TotCost: 22966.03","units: 485.5316<br />TotCost: 22845.43","units: 479.9747<br />TotCost: 22724.84","units: 474.4177<br />TotCost: 22604.24","units: 468.8608<br />TotCost: 22483.65","units: 463.3038<br />TotCost: 22363.05","units: 457.7468<br />TotCost: 22242.46","units: 452.1899<br />TotCost: 22121.86","units: 446.6329<br />TotCost: 22001.27","units: 441.0759<br />TotCost: 21880.67","units: 435.5190<br />TotCost: 21760.08","units: 429.9620<br />TotCost: 21639.49","units: 424.4051<br />TotCost: 21518.89","units: 418.8481<br />TotCost: 21398.30","units: 413.2911<br />TotCost: 21277.70","units: 407.7342<br />TotCost: 21157.11","units: 402.1772<br />TotCost: 21036.51","units: 396.6203<br />TotCost: 20915.92","units: 391.0633<br />TotCost: 20795.32","units: 385.5063<br />TotCost: 20674.73","units: 379.9494<br />TotCost: 20554.13","units: 374.3924<br />TotCost: 20433.54","units: 368.8354<br />TotCost: 20312.94","units: 363.2785<br />TotCost: 20192.35","units: 357.7215<br />TotCost: 20071.75","units: 352.1646<br />TotCost: 19951.16","units: 346.6076<br 
/>TotCost: 19830.56","units: 341.0506<br />TotCost: 19709.97","units: 335.4937<br />TotCost: 19589.38","units: 329.9367<br />TotCost: 19468.78","units: 324.3797<br />TotCost: 19348.19","units: 318.8228<br />TotCost: 19227.59","units: 313.2658<br />TotCost: 19107.00","units: 307.7089<br />TotCost: 18986.40","units: 302.1519<br />TotCost: 18865.81","units: 296.5949<br />TotCost: 18745.21","units: 291.0380<br />TotCost: 18624.62","units: 285.4810<br />TotCost: 18504.02","units: 279.9241<br />TotCost: 18383.43","units: 274.3671<br />TotCost: 18262.83","units: 268.8101<br />TotCost: 18142.24","units: 263.2532<br />TotCost: 18021.64","units: 257.6962<br />TotCost: 17901.05","units: 252.1392<br />TotCost: 17780.45","units: 246.5823<br />TotCost: 17659.86","units: 241.0253<br />TotCost: 17539.27","units: 235.4684<br />TotCost: 17418.67","units: 229.9114<br />TotCost: 17298.08","units: 224.3544<br />TotCost: 17177.48","units: 218.7975<br />TotCost: 17056.89","units: 213.2405<br />TotCost: 16936.29","units: 207.6835<br />TotCost: 16815.70","units: 202.1266<br />TotCost: 16695.10","units: 196.5696<br />TotCost: 16574.51","units: 191.0127<br />TotCost: 16453.91","units: 185.4557<br />TotCost: 16333.32","units: 179.8987<br />TotCost: 16212.72","units: 174.3418<br />TotCost: 16092.13","units: 168.7848<br />TotCost: 15971.53","units: 163.2278<br />TotCost: 15850.94","units: 157.6709<br />TotCost: 15730.34","units: 152.1139<br />TotCost: 15609.75","units: 146.5570<br />TotCost: 15489.16","units: 141.0000<br />TotCost: 15368.56","units: 141.0000<br />TotCost: 
15368.56"],"type":"scatter","mode":"lines","line":{"width":3.7795275590551185,"color":"rgba(55,17,66,0.4)","dash":"solid"},"fill":"toself","fillcolor":"rgba(191,191,191,0.4)","hoveron":"points","hoverinfo":"x+y","showlegend":false,"xaxis":"x","yaxis":"y","frame":null}],"layout":{"margin":{"t":23.914764079147645,"r":7.3059360730593621,"b":37.869101978691035,"l":54.794520547945211},"plot_bgcolor":"rgba(235,235,235,1)","paper_bgcolor":"rgba(255,255,255,1)","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724},"xaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[119.05,601.95000000000005],"tickmode":"array","ticktext":["200","300","400","500","600"],"tickvals":[200,300,400,500,600],"categoryorder":"array","categoryarray":["200","300","400","500","600"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.6529680365296811,"tickwidth":0.66417600664176002,"showticklabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":11.68949771689498},"tickangle":-0,"showline":false,"linecolor":null,"linewidth":0,"showgrid":true,"gridcolor":"rgba(255,255,255,1)","gridwidth":0.66417600664176002,"zeroline":false,"anchor":"y","title":{"text":"Units","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724}},"hoverformat":".2f"},"yaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[13808.435937641598,25898.492209526459],"tickmode":"array","ticktext":["14000","16000","18000","20000","22000","24000"],"tickvals":[14000,16000,18000,20000,22000,24000],"categoryorder":"array","categoryarray":["14000","16000","18000","20000","22000","24000"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.6529680365296811,"tickwidth":0.66417600664176002,"showticklabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":11.68949771689498},"tickangle":-0,"showline":false,"linecolor":null,"linewidth":0,"showgrid":true,"gridcolor":"rgba(255,255,255,1)
","gridwidth":0.66417600664176002,"zeroline":false,"anchor":"x","title":{"text":"Total Costs per period","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724}},"hoverformat":".2f"},"shapes":[{"type":"rect","fillcolor":null,"line":{"color":null,"width":0,"linetype":[]},"yref":"paper","xref":"paper","x0":0,"x1":1,"y0":0,"y1":1}],"showlegend":false,"legend":{"bgcolor":"rgba(255,255,255,1)","bordercolor":"transparent","borderwidth":1.8897637795275593,"font":{"color":"rgba(0,0,0,1)","family":"","size":11.68949771689498}},"hovermode":"closest","barmode":"relative"},"config":{"doubleClick":"reset","modeBarButtonsToAdd":["hoverclosest","hovercompare"],"showSendToCloud":false},"source":"A","attrs":{"ec017e4fd93b":{"x":{},"y":{},"type":"scatter"},"ec012c3ef146":{"x":{},"y":{}}},"cur_data":"ec017e4fd93b","visdat":{"ec017e4fd93b":["function (y) ","x"],"ec012c3ef146":["function (y) ","x"]},"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.20000000000000001,"selected":{"opacity":1},"debounce":0},"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot","plotly_sunburstclick"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script>

---

### A Linear Model

``` r
Model.HMB <- lm(TotCost ~ units, data=HMB)
summary(Model.HMB)
```

```
Call:
lm(formula = TotCost ~ units, data = HMB)

Residuals:
    Min      1Q  Median      3Q     Max
-1606.7  -610.9  -124.8   509.8  2522.3

Coefficients:
             Estimate Std. Error t value Pr(>|t|)
(Intercept) 12308.642    323.601   38.04   <2e-16 ***
units          21.702      0.862   25.18   <2e-16 ***
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 871.8 on 58 degrees of freedom
Multiple R-squared:  0.9162,  Adjusted R-squared:  0.9147
F-statistic: 633.8 on 1 and 58 DF,  p-value: < 2.2e-16
```

`$$Total.Cost_{t} = 12308.64 + 21.70 \times units_{t} + \epsilon_{t}$$`

where `\(\epsilon_{t}\)` has an estimated standard deviation (the residual standard error) of about 872 dollars.

---

### `fitted.values()` or predictions

The line traces the fitted values: the cost the regression predicts for each observed number of units.

``` r
data.frame(units=HMB$units, TotCost = HMB$TotCost,
           Fitted = fitted.values(Model.HMB)) %>%
  ggplot() +
  aes(x=units, y=TotCost) +
  geom_point() +
  geom_line(aes(x=units, y=Fitted)) +
  scale_x_continuous(limits=c(0,600)) +
  scale_y_continuous(limits=c(0,25000))
```

<img src="index_files/figure-html/unnamed-chunk-26-1.svg" width="468" />

---

### Residual Costs?

``` r
data.frame(Residual.Cost = residuals(Model.HMB)) %>%
  ggplot() +
  aes(sample=Residual.Cost) +
  geom_qq() +
  geom_qq_line()
```

<img src="index_files/figure-html/unnamed-chunk-27-1.svg" width="468" />

---

## Not the Prettiest, but the Best....

``` r
car::qqPlot(residuals(Model.HMB))
```

<img src="index_files/figure-html/unnamed-chunk-28-1.svg" width="468" />

---

## Because normality is tenable, I can....

- Interpret the table, including the `\(t\)` and `\(F\)` statistics.
- Use confidence intervals for the estimates.
- Produce predictions of two forms: a confidence interval for the average cost and a prediction interval for a single period.
- Examine other characteristics of the regression.

---

### `anova` [Sums of squares]

``` r
anova(Model.HMB)
```

```
Analysis of Variance Table

Response: TotCost
          Df    Sum Sq   Mean Sq F value    Pr(>F)
units      1 481694173 481694173  633.81 < 2.2e-16 ***
Residuals 58  44080089    760002
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```

Two types of squares: those explained by units and those left in the residuals. Why?

---

### `confint` [confidence intervals]

Under normality, the slope and intercept have confidence intervals based on the `\(t\)` distribution.
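
Each interval is the estimate plus or minus a `\(t\)` quantile with 58 residual degrees of freedom times the estimate's standard error. As a sketch of that arithmetic, the R code below plugs in the rounded estimates and standard errors printed by `summary(Model.HMB)` rather than using the fitted model object, so its endpoints should agree with `confint()` only to about two decimal places. The names `est`, `se`, and `resid_df` are hypothetical, introduced only for this illustration.

```r
# Rounded estimates and standard errors copied by hand from summary(Model.HMB);
# because they are rounded, results differ slightly from confint(Model.HMB)
est <- c(Intercept = 12308.642, units = 21.702)
se  <- c(Intercept = 323.601,   units = 0.862)
resid_df <- 58  # 60 observations minus 2 estimated coefficients

# 95% interval: estimate +/- t(0.975, 58) * SE
t95 <- qt(0.975, df = resid_df)
ci95 <- cbind(lower = est - t95 * se, upper = est + t95 * se)
print(round(ci95, 3))

# The 90% interval uses t(0.95, 58); its lower limit is also
# a one-sided 95% lower bound for the variable cost per unit
t90 <- qt(0.95, df = resid_df)
lower90 <- unname(est["units"] - t90 * se["units"])
print(round(lower90, 3))

# Test H0: variable cost = 20 dollars per unit
t_stat <- unname((est["units"] - 20) / se["units"])
p_two_sided <- 2 * pt(t_stat, df = resid_df, lower.tail = FALSE)
p_one_sided <- pt(t_stat, df = resid_df, lower.tail = FALSE)
print(round(c(t = t_stat, two.sided = p_two_sided, one.sided = p_one_sided), 4))
```

The two-sided p-value lands just above 0.05 while the one-sided p-value falls below it, which is why 20 dollars per unit survives the two-sided 95 percent interval but not a one-sided 95 percent lower bound.
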

``` r
confint(Model.HMB, level = 0.95)
```

```
                  2.5 %      97.5 %
(Intercept) 11660.88464 12956.39966
units          19.97605    23.42705
```

With 95% confidence, fixed costs lie between 11660.88 and 12956.40 dollars per period, and variable costs lie between 19.976 and 23.427 dollars per unit. If the goal is a variable cost of 20 dollars per unit, this two-sided interval cannot rule it out, though a one-sided 95 percent lower bound would: that bound, the 20.26 lower limit of the 90 percent interval below, exceeds 20.

``` r
confint(Model.HMB, level = 0.9)
```

```
                    5 %        95 %
(Intercept) 11767.72623 12849.55807
units          20.26066    23.14245
```

---

## Plots

``` r
RegressionPlots(Model.HMB)
```

<div class="plotly html-widget html-fill-item" id="htmlwidget-e083190dedc4ba04be03" style="width:468px;height:432px;"></div> <script type="application/json" data-for="htmlwidget-e083190dedc4ba04be03">{"x":{"data":[{"x":[18124.657840609012,18363.374902450683,21640.309115003649,15368.560853892317,21249.681195626472,24765.332470021061,22204.549442992902,16562.146163100355,20685.440867637219,17907.642329843962,22074.340136533843,19166.332292281531,19947.588131035885,18645.495066445295,18016.150085226513,23701.956467272081,15411.963956045336,18102.956289532551,19383.347803046629,22291.355647298944,24895.541776480117,24591.720061408982,16128.115141570161,16844.266327094982,20989.262582708354,22009.235483304314,20121.200539647962,16410.235305564787,15585.576364657414,22530.07270914055,21097.770338090904,23289.626996818395,20468.425356872121,18146.359391685572,22790.491322058668,21727.115319309687,16063.010488340629,17017.878735707061,17321.700450778197,16084.71203941714,16345.130652335258,18602.091964292278,16779.161673865452,16041.308937264121,22508.371158064041,15759.188773269492,24114.285937725766,21596.906012850628,21813.921523615729,22942.402179594235,17625.522165849336,24157.689039878787,24331.301448490864,20945.859480555337,19882.483477806356,19513.557109505688,20837.351725172786,18732.301270751337,23528.344058660001,23029.208383900277],"y":[1499.6498893909873,-1572.9599824506843,-151.20234500365066,629.46588610768265,252
2.2644743735286,-399.85687002105863,1520.2884370070956,366.82866689964379,52.768052362783251,-159.97940984396442,-594.12562653384339,-1606.7423222815307,-215.68072103588466,649.70543355470249,-187.19308522651383,473.4705127279201,-1053.9800060453367,421.85734046744892,704.13394695336922,9.6756927010582672,-267.57590648011842,-1182.1809614089802,-818.62832157015964,-661.29441709498292,-233.66141270835325,421.7188366956853,-930.8689896479641,1757.1217744352143,-339.51331465741407,-1201.4262591405507,-851.82837809090381,591.30512318160754,-341.76857687211862,229.68568831442872,494.23565794133106,242.31944069031186,-1068.5760083406301,-1290.9579457070615,-896.91333077819763,1325.6477605828584,1260.0751576647422,943.9023757077241,-1479.0792338654535,1136.7918327358805,-98.41980806404186,-273.58445326949254,125.7171622742359,-821.9901128506292,-178.31898361572743,-207.55700959423606,374.62011415066399,283.40135012121539,556.52771150913713,-329.09569055533439,679.29585219364606,180.94184049431254,-776.96555517278466,266.90888924866294,-277.22068866000075,748.82082609972451],"mode":"markers","showlegend":false,"text":["1","2","3","4","5","6","7","8","9","10","11","12","13","14","15","16","17","18","19","20","21","22","23","24","25","26","27","28","29","30","31","32","33","34","35","36","37","38","39","40","41","42","43","44","45","46","47","48","49","50","51","52","53","54","55","56","57","58","59","60"],"type":"scatter","marker":{"color":"rgba(31,119,180,1)","line":{"color":"rgba(31,119,180,1)"}},"error_y":{"color":"rgba(31,119,180,1)"},"error_x":{"color":"rgba(31,119,180,1)"},"line":{"color":"rgba(31,119,180,1)"},"xaxis":"x","yaxis":"y","frame":null},{"x":[15368.560853892317,15562.989035985946,15757.417218079574,15951.845400173203,16146.273582266831,16340.701764360459,16535.12994645409,16729.558128547716,16923.986310641347,17118.414492734973,17312.842674828604,17507.27085692223,17701.699039015861,17896.127221109487,18090.555403203118,18284.983585296744,18479.411767390375,
18673.839949484001,18868.268131577632,19062.696313671258,19257.124495764889,19451.552677858519,19645.980859952146,19840.409042045772,20034.837224139403,20229.265406233033,20423.69358832666,20618.12177042029,20812.549952513917,21006.978134607547,21201.406316701174,21395.834498794804,21590.262680888431,21784.690862982061,21979.119045075688,22173.547227169318,22367.975409262945,22562.403591356575,22756.831773450205,22951.259955543832,23145.688137637459,23340.116319731089,23534.544501824719,23728.972683918346,23923.400866011973,24117.829048105603,24312.257230199233,24506.68541229286,24701.113594386487,24895.541776480117],"y":[-98.650092192208348,-89.717099322760546,-80.4551020462129,-71.364518053530048,-62.936629300214193,-54.431646687646619,-45.42215463618173,-36.330988271903031,-27.580982720893438,-19.594973109236488,-12.79579456301515,-7.9293207116439008,-5.2420097146602078,-4.2752470805345792,-4.569377029381279,-5.6647437813146002,-7.1016915564488716,-8.3728945287026768,-9.0785535494310565,-10.332100978411891,-13.508048800504159,-19.980909000566793,-31.125193563458463,-48.315414474038157,-71.792115418940895,-93.723033990051945,-111.62852191418409,-124.6258005654646,-131.83209131801959,-132.36461554597591,-125.34059462346015,-112.62275504171291,-100.14748483063691,-87.615506783278249,-74.430956817284368,-59.997970850301677,-46.292794787648731,-36.892382797066013,-30.511093079606248,-25.767392484312648,-21.279747860228237,-15.666626056396103,-7.5491643070799128,1.4776307589870807,9.4371834984402092,16.647735677492467,23.427529062356552,30.094805419245233,36.967806514371482,44.364774113948314],"mode":"line+lines","showlegend":false,"text":["","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","",""],"type":"scatter","name":"Smooth","line":{"color":"rgba(255,127,14,1)","width":2},"marker":{"color":"rgba(255,127,14,1)","line":{"color":"rgba(255,127,14,1)"}},"error_y":{"color":"rgba(
255,127,14,1)"},"error_x":{"color":"rgba(255,127,14,1)"},"xaxis":"x","yaxis":"y","frame":null},{"x":[1.5689196324989263,-1.9599639845400538,-0.020890088246888566,0.81221780149991285,2.3939797998185104,-0.59776012604247841,1.7316643961222455,0.40791874094503472,0.10463345561407526,-0.062706777943213832,-0.64849218059285718,-2.3939797998185091,-0.23183436245300987,0.8717709721899588,-0.14674495548654856,0.59776012604247841,-1.1503493803760079,0.54852228269809822,1.0013312975256929,0.062706777943213832,-0.31863936396437514,-1.3305615131788966,-0.81221780149991285,-0.70095141958421181,-0.27497774896900473,0.50058010546673959,-1.072861341650003,1.9599639845400536,-0.50058010546673959,-1.4395314709384557,-0.93458929107348021,0.75541502636046931,-0.548522282698098,0.23183436245300987,0.64849218059285729,0.27497774896900462,-1.2354403415612518,-1.5689196324989263,-1.0013312975256929,1.4395314709384559,1.3305615131788966,1.1503493803760079,-1.7316643961222451,1.235440341561252,0.020890088246888424,-0.36291729513935606,0.14674495548654856,-0.8717709721899588,-0.10463345561407539,-0.18911842627279249,0.45376219016987962,0.36291729513935622,0.70095141958421181,-0.45376219016987929,0.93458929107347977,0.18911842627279238,-0.75541502636046931,0.31863936396437514,-0.40791874094503472,1.072861341650003],"y":[1.7408439603263621,-1.8243687375837048,-0.17543635642660865,0.7448056810156376,2.9228903841346314,-0.47430810360443798,1.7681445698239813,0.42955842390420884,0.061075027301438059,-0.18587512800435377,-0.69056667138053651,-1.8598079210384881,-0.24949032648220246,0.75289891436906076,-0.21739414995565767,0.55602948242197647,-1.2465609258592263,0.48974759264027534,0.81478550416098594,0.011257943446569442,-0.31784613711339482,-1.3997464305008782,-0.96187708752619827,-0.772850857002083,-0.27060018683084147,0.49003162247193255,-1.0768243829663942,2.059943740644878,-0.40086580788485715,-1.3996530932614717,-0.98673965259526653,0.69221028580050203,-0.39545699093372816,0.26660458083499461
,0.57665426345330295,0.2812473596310226,-1.2562485162523815,-1.5070354434778332,-1.045142245769509,1.5581838579974101,1.477981142324025,1.0939566150630557,-1.7293519559259842,1.336691378545448,-0.1146446764368184,-0.32249715473584584,0.14816597814523177,-0.95358792587744878,-0.20703508505373411,-0.24240018382919526,0.43583087975317436,0.33414037823502329,0.65724261216085,-0.38108563707987442,0.78578411909290935,0.20934735565595325,-0.89951411456929109,0.30923080530443209,-0.32510939029617275,0.87502434614437552],"text":["1","2","3","4","5","6","7","8","9","10","11","12","13","14","15","16","17","18","19","20","21","22","23","24","25","26","27","28","29","30","31","32","33","34","35","36","37","38","39","40","41","42","43","44","45","46","47","48","49","50","51","52","53","54","55","56","57","58","59","60"],"mode":"markers","marker":{"color":"rgba(44,160,44,1)","size":10,"opacity":0.5,"line":{"color":"rgba(44,160,44,1)"}},"showlegend":false,"type":"scatter","error_y":{"color":"rgba(44,160,44,1)"},"error_x":{"color":"rgba(44,160,44,1)"},"line":{"color":"rgba(44,160,44,1)"},"xaxis":"x2","yaxis":"y2","frame":null},{"x":[1.5689196324989263,-1.9599639845400538,-0.020890088246888566,0.81221780149991285,2.3939797998185104,-0.59776012604247841,1.7316643961222455,0.40791874094503472,0.10463345561407526,-0.062706777943213832,-0.64849218059285718,-2.3939797998185091,-0.23183436245300987,0.8717709721899588,-0.14674495548654856,0.59776012604247841,-1.1503493803760079,0.54852228269809822,1.0013312975256929,0.062706777943213832,-0.31863936396437514,-1.3305615131788966,-0.81221780149991285,-0.70095141958421181,-0.27497774896900473,0.50058010546673959,-1.072861341650003,1.9599639845400536,-0.50058010546673959,-1.4395314709384557,-0.93458929107348021,0.75541502636046931,-0.548522282698098,0.23183436245300987,0.64849218059285729,0.27497774896900462,-1.2354403415612518,-1.5689196324989263,-1.0013312975256929,1.4395314709384559,1.3305615131788966,1.1503493803760079,-1.7316643961222451,1.
235440341561252,0.020890088246888424,-0.36291729513935606,0.14674495548654856,-0.8717709721899588,-0.10463345561407539,-0.18911842627279249,0.45376219016987962,0.36291729513935622,0.70095141958421181,-0.45376219016987929,0.93458929107347977,0.18911842627279238,-0.75541502636046931,0.31863936396437514,-0.40791874094503472,1.072861341650003],"y":[1.5689196324989263,-1.9599639845400538,-0.020890088246888566,0.81221780149991285,2.3939797998185104,-0.59776012604247841,1.7316643961222455,0.40791874094503472,0.10463345561407526,-0.062706777943213832,-0.64849218059285718,-2.3939797998185091,-0.23183436245300987,0.8717709721899588,-0.14674495548654856,0.59776012604247841,-1.1503493803760079,0.54852228269809822,1.0013312975256929,0.062706777943213832,-0.31863936396437514,-1.3305615131788966,-0.81221780149991285,-0.70095141958421181,-0.27497774896900473,0.50058010546673959,-1.072861341650003,1.9599639845400536,-0.50058010546673959,-1.4395314709384557,-0.93458929107348021,0.75541502636046931,-0.548522282698098,0.23183436245300987,0.64849218059285729,0.27497774896900462,-1.2354403415612518,-1.5689196324989263,-1.0013312975256929,1.4395314709384559,1.3305615131788966,1.1503493803760079,-1.7316643961222451,1.235440341561252,0.020890088246888424,-0.36291729513935606,0.14674495548654856,-0.8717709721899588,-0.10463345561407539,-0.18911842627279249,0.45376219016987962,0.36291729513935622,0.70095141958421181,-0.45376219016987929,0.93458929107347977,0.18911842627279238,-0.75541502636046931,0.31863936396437514,-0.40791874094503472,1.072861341650003],"text":["1","2","3","4","5","6","7","8","9","10","11","12","13","14","15","16","17","18","19","20","21","22","23","24","25","26","27","28","29","30","31","32","33","34","35","36","37","38","39","40","41","42","43","44","45","46","47","48","49","50","51","52","53","54","55","56","57","58","59","60"],"mode":"line+markers+lines","marker":{"color":"rgba(214,39,40,1)","size":10,"opacity":0.5,"line":{"color":"rgba(214,39,40,1)"}},"showlegend":fals
e,"type":"scatter","line":{"color":"rgba(214,39,40,1)","width":2},"error_y":{"color":"rgba(214,39,40,1)"},"error_x":{"color":"rgba(214,39,40,1)"},"xaxis":"x2","yaxis":"y2","frame":null},{"x":[18124.657840609012,18363.374902450683,21640.309115003649,15368.560853892317,21249.681195626472,24765.332470021061,22204.549442992902,16562.146163100355,20685.440867637219,17907.642329843962,22074.340136533843,19166.332292281531,19947.588131035885,18645.495066445295,18016.150085226513,23701.956467272081,15411.963956045336,18102.956289532551,19383.347803046629,22291.355647298944,24895.541776480117,24591.720061408982,16128.115141570161,16844.266327094982,20989.262582708354,22009.235483304314,20121.200539647962,16410.235305564787,15585.576364657414,22530.07270914055,21097.770338090904,23289.626996818395,20468.425356872121,18146.359391685572,22790.491322058668,21727.115319309687,16063.010488340629,17017.878735707061,17321.700450778197,16084.71203941714,16345.130652335258,18602.091964292278,16779.161673865452,16041.308937264121,22508.371158064041,15759.188773269492,24114.285937725766,21596.906012850628,21813.921523615729,22942.402179594235,17625.522165849336,24157.689039878787,24331.301448490864,20945.859480555337,19882.483477806356,19513.557109505688,20837.351725172786,18732.301270751337,23528.344058660001,23029.208383900277],"y":[1.3194104593819023,1.3506919477007719,0.41885123424267079,0.86302125177520261,1.7096462745651895,0.68870030027903861,1.329715973365734,0.65540706732854881,0.24713362236134132,0.43113237874735616,0.83100341237598807,1.3637477483165603,0.49949006644997701,0.86769747860015167,0.46625545568460397,0.74567384453390639,1.116494928720783,0.6998196858050475,0.90265469818806454,0.10610345633658426,0.56377844683296896,1.1831087990970561,0.98075332654352909,0.87911936447906947,0.52019245172420703,0.70002258711553911,1.0377014902978574,1.4352504104318793,0.63313964327378613,1.1830693526845633,0.99334769974831394,0.83199175825274974,0.62885371187083583,0.516337661646905
87,0.75937755000612372,0.53032759652032313,1.1208249266733772,1.2276137191632526,1.0223219873256708,1.2482723492881711,1.2157224775104001,1.0459238093967724,1.3150482713292255,1.1561537002256439,0.33859219783807543,0.56788832945909873,0.38492334060853178,0.97651826704749811,0.45501108234166571,0.49234153169237638,0.6601748857334504,0.57804876804212924,0.81070500933499234,0.61732134021097507,0.88644465089079838,0.45754492200870645,0.94842717937082077,0.55608525003315101,0.57018364611427852,0.93542736016452688],"text":["1","2","3","4","5","6","7","8","9","10","11","12","13","14","15","16","17","18","19","20","21","22","23","24","25","26","27","28","29","30","31","32","33","34","35","36","37","38","39","40","41","42","43","44","45","46","47","48","49","50","51","52","53","54","55","56","57","58","59","60"],"mode":"markers","marker":{"color":"rgba(148,103,189,1)","size":10,"opacity":0.5,"line":{"color":"rgba(148,103,189,1)"}},"showlegend":false,"type":"scatter","error_y":{"color":"rgba(148,103,189,1)"},"error_x":{"color":"rgba(148,103,189,1)"},"line":{"color":"rgba(148,103,189,1)"},"xaxis":"x3","yaxis":"y3","frame":null},{"x":[15368.560853892317,15562.989035985946,15757.417218079574,15951.845400173203,16146.273582266831,16340.701764360459,16535.12994645409,16729.558128547716,16923.986310641347,17118.414492734973,17312.842674828604,17507.27085692223,17701.699039015861,17896.127221109487,18090.555403203118,18284.983585296744,18479.411767390375,18673.839949484001,18868.268131577632,19062.696313671258,19257.124495764889,19451.552677858519,19645.980859952146,19840.409042045772,20034.837224139403,20229.265406233033,20423.69358832666,20618.12177042029,20812.549952513917,21006.978134607547,21201.406316701174,21395.834498794804,21590.262680888431,21784.690862982061,21979.119045075688,22173.547227169318,22367.975409262945,22562.403591356575,22756.831773450205,22951.259955543832,23145.688137637459,23340.116319731089,23534.544501824719,23728.972683918346,23923.400866011973,24117.82
9048105603,24312.257230199233,24506.68541229286,24701.113594386487,24895.541776480117],"y":[1.0388687791478377,1.0278523166907649,1.0168580248230832,1.0058073411886066,0.99462571895643204,0.98373327458311333,0.9732581126178812,0.9629226881863675,0.95244945641420231,0.94156087242701691,0.92997939135044183,0.91783490324339301,0.90548890328621845,0.89290483511610319,0.8800452971373951,0.86687288775444271,0.85335020537159367,0.83955833486106068,0.82670083986227405,0.81498413649309454,0.80426093274419652,0.79438393660625473,0.78520585606994375,0.77657939912593843,0.76834243739674224,0.76033343004538589,0.75264289889280267,0.74539763572237283,0.73872443231747598,0.73275008046149259,0.72760137193780272,0.72333731315201855,0.71978462565967116,0.71680080419855874,0.71424801997815246,0.71198844420792406,0.71003373754366106,0.70852157347646538,0.70733220021973597,0.70634054037338345,0.70542151653731877,0.70445005131145233,0.70330134583933168,0.70215294773804515,0.70119271962909324,0.70036224306488748,0.69960309959784028,0.69885687078036351,0.69806513816486948,0.69716948330377015],"text":["","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","",""],"mode":"line+markers+lines","marker":{"color":"rgba(140,86,75,1)","size":10,"opacity":0.5,"line":{"color":"rgba(140,86,75,1)"}},"showlegend":false,"type":"scatter","name":"Smooth","line":{"color":"rgba(140,86,75,1)","width":2},"error_y":{"color":"rgba(140,86,75,1)"},"error_x":{"color":"rgba(140,86,75,1)"},"xaxis":"x3","yaxis":"y3","frame":null},{"x":[0.023559915940385555,0.021872127365138071,0.022620140869793179,0.060181551663935712,0.020190335412618807,0.064866608712349783,0.027248360363653454,0.040450055661043247,0.01779911691908646,0.025299588338367776,0.02606298448393423,0.017931433703196761,0.016666667753011361,0.020182513730799479,0.024405309383691228,0.045939833407302538,0.059360405434269875,0.023725083788136966,0.017325905169017088,0.028
077719359229578,0.067506817410464076,0.061455833993667962,0.046940747957449271,0.036650543355940819,0.018922440789705675,0.025496694720214853,0.016729762653020609,0.04263282633409049,0.056154037333799853,0.030519778784587559,0.019416510357959917,0.039864081331411894,0.017231393180366872,0.02339670351308883,0.033453691635017643,0.02324613613806676,0.047981813807601879,0.034476637255622139,0.030973436330108604,0.047632836437096177,0.043597630786504651,0.02042094466492534,0.037498022581065049,0.048332746598562411,0.030287996280008129,0.053072816142439083,0.052721492267387586,0.022318875758385377,0.023903418133617661,0.035295176258014857,0.027853497813742155,0.053476414924316429,0.056574322370225104,0.018738500905587801,0.016675271603012621,0.017056448230341224,0.018312871053252678,0.019729116908005741,0.043295583506914925,0.036390472435448157],"y":[1499.6498893909873,-1572.9599824506843,-151.20234500365066,629.46588610768265,2522.2644743735286,-399.85687002105863,1520.2884370070956,366.82866689964379,52.768052362783251,-159.97940984396442,-594.12562653384339,-1606.7423222815307,-215.68072103588466,649.70543355470249,-187.19308522651383,473.4705127279201,-1053.9800060453367,421.85734046744892,704.13394695336922,9.6756927010582672,-267.57590648011842,-1182.1809614089802,-818.62832157015964,-661.29441709498292,-233.66141270835325,421.7188366956853,-930.8689896479641,1757.1217744352143,-339.51331465741407,-1201.4262591405507,-851.82837809090381,591.30512318160754,-341.76857687211862,229.68568831442872,494.23565794133106,242.31944069031186,-1068.5760083406301,-1290.9579457070615,-896.91333077819763,1325.6477605828584,1260.0751576647422,943.9023757077241,-1479.0792338654535,1136.7918327358805,-98.41980806404186,-273.58445326949254,125.7171622742359,-821.9901128506292,-178.31898361572743,-207.55700959423606,374.62011415066399,283.40135012121539,556.52771150913713,-329.09569055533439,679.29585219364606,180.94184049431254,-776.96555517278466,266.90888924866294,-277.220688660000
75,748.82082609972451],"text":["1","2","3","4","5","6","7","8","9","10","11","12","13","14","15","16","17","18","19","20","21","22","23","24","25","26","27","28","29","30","31","32","33","34","35","36","37","38","39","40","41","42","43","44","45","46","47","48","49","50","51","52","53","54","55","56","57","58","59","60"],"mode":"markers","marker":{"color":"rgba(227,119,194,1)","size":10,"opacity":0.5,"line":{"color":"rgba(227,119,194,1)"}},"showlegend":false,"type":"scatter","error_y":{"color":"rgba(227,119,194,1)"},"error_x":{"color":"rgba(227,119,194,1)"},"line":{"color":"rgba(227,119,194,1)"},"xaxis":"x4","yaxis":"y4","frame":null},{"x":[0.016666667753011361,0.017704221827653254,0.018741775902295147,0.019779329976937036,0.020816884051578929,0.021854438126220822,0.022891992200862715,0.023929546275504608,0.024967100350146501,0.026004654424788394,0.027042208499430283,0.028079762574072176,0.029117316648714069,0.030154870723355959,0.031192424797997852,0.032229978872639745,0.033267532947281638,0.03430508702192353,0.035342641096565423,0.036380195171207316,0.037417749245849202,0.038455303320491102,0.039492857395132988,0.040530411469774888,0.041567965544416774,0.042605519619058667,0.04364307369370056,0.044680627768342453,0.045718181842984346,0.046755735917626239,0.047793289992268131,0.048830844066910017,0.049868398141551917,0.050905952216193803,0.051943506290835703,0.052981060365477589,0.054018614440119489,0.055056168514761375,0.056093722589403261,0.057131276664045161,0.058168830738687047,0.059206384813328947,0.060243938887970833,0.061281492962612732,0.062319047037254618,0.063356601111896518,0.064394155186538404,0.065431709261180304,0.06646926333582219,0.067506817410464076],"y":[-122.22824016412525,-104.48198432637575,-90.649742234246503,-76.880161952441355,-66.577062987176475,-58.457386943892885,-52.197542720797365,-47.823993417702468,-47.549752471401632,-54.368830932317948,-70.736643610560421,-98.273028192746764,-116.14248744058821,-117.04304836614132,-104.5269802040533
9,-82.14655218897164,-53.454033555543404,-22.001693538415928,8.6581986277634542,34.973373708347516,52.92619849776402,62.70282995518663,67.147742971596784,69.105563619904629,71.420917973020181,76.93843210385343,88.482971988253055,97.558773859111312,96.767675441822263,87.981713201129992,73.072923601778385,53.91334310851164,32.375008186073302,10.329955299207942,-10.349779087341027,-27.792158508829075,-41.329279277397774,-56.309866394573518,-73.388695653373659,-92.275584304341947,-112.68034959802146,-134.31280878495627,-156.88277911568932,-180.1000778407647,-203.67452221072534,-227.31592947611551,-250.73411688747794,-273.63890169535688,-295.74010115029529,-316.74753250283686],"text":["","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","","",""],"mode":"line+markers+lines","marker":{"color":"rgba(127,127,127,1)","size":10,"opacity":0.5,"line":{"color":"rgba(127,127,127,1)"}},"showlegend":false,"type":"scatter","name":"Smooth","line":{"color":"rgba(127,127,127,1)","width":2},"error_y":{"color":"rgba(127,127,127,1)"},"error_x":{"color":"rgba(127,127,127,1)"},"xaxis":"x4","yaxis":"y4","frame":null}],"layout":{"xaxis":{"domain":[0,0.47999999999999998],"automargin":true,"text":"Residuals","anchor":"y"},"xaxis2":{"domain":[0.52000000000000002,1],"automargin":true,"text":"Theoretical","anchor":"y2"},"xaxis3":{"domain":[0,0.47999999999999998],"automargin":true,"text":"Scale","anchor":"y3"},"xaxis4":{"domain":[0.52000000000000002,1],"automargin":true,"text":"Leverage","anchor":"y4"},"yaxis4":{"domain":[0,0.47999999999999998],"automargin":true,"text":"Residuals","anchor":"x4"},"yaxis3":{"domain":[0,0.47999999999999998],"automargin":true,"text":"Location","anchor":"x3"},"yaxis2":{"domain":[0.52000000000000002,1],"automargin":true,"text":"Standardized Residuals","anchor":"x2"},"yaxis":{"domain":[0.52000000000000002,1],"automargin":true,"text":"Fitted 
Values","anchor":"x"},"annotations":[],"shapes":[],"images":[],"margin":{"b":40,"l":60,"t":25,"r":10},"plot_bgcolor":"#e6e6e6","hovermode":"closest","showlegend":false},"attrs":{"ec01825554":{"x":{},"y":{},"mode":"markers","showlegend":false,"text":{},"alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter"},"ec01825554.1":{"x":[15368.560853892317,15562.989035985946,15757.417218079574,15951.845400173203,16146.273582266831,16340.701764360459,16535.12994645409,16729.558128547716,16923.986310641347,17118.414492734973,17312.842674828604,17507.27085692223,17701.699039015861,17896.127221109487,18090.555403203118,18284.983585296744,18479.411767390375,18673.839949484001,18868.268131577632,19062.696313671258,19257.124495764889,19451.552677858519,19645.980859952146,19840.409042045772,20034.837224139403,20229.265406233033,20423.69358832666,20618.12177042029,20812.549952513917,21006.978134607547,21201.406316701174,21395.834498794804,21590.262680888431,21784.690862982061,21979.119045075688,22173.547227169318,22367.975409262945,22562.403591356575,22756.831773450205,22951.259955543832,23145.688137637459,23340.116319731089,23534.544501824719,23728.972683918346,23923.400866011973,24117.829048105603,24312.257230199233,24506.68541229286,24701.113594386487,24895.541776480117],"y":[-98.650092192208348,-89.717099322760546,-80.4551020462129,-71.364518053530048,-62.936629300214193,-54.431646687646619,-45.42215463618173,-36.330988271903031,-27.580982720893438,-19.594973109236488,-12.79579456301515,-7.9293207116439008,-5.2420097146602078,-4.2752470805345792,-4.569377029381279,-5.6647437813146002,-7.1016915564488716,-8.3728945287026768,-9.0785535494310565,-10.332100978411891,-13.508048800504159,-19.980909000566793,-31.125193563458463,-48.315414474038157,-71.792115418940895,-93.723033990051945,-111.62852191418409,-124.6258005654646,-131.83209131801959,-132.36461554597591,-125.34059462346015,-112.62275504171291,-100.14748483063691,-87.615506783278249,-74.430956817284368,-59.99797085030
1677,-46.292794787648731,-36.892382797066013,-30.511093079606248,-25.767392484312648,-21.279747860228237,-15.666626056396103,-7.5491643070799128,1.4776307589870807,9.4371834984402092,16.647735677492467,23.427529062356552,30.094805419245233,36.967806514371482,44.364774113948314],"mode":"line","showlegend":false,"text":"","alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter","name":"Smooth","line":{"width":2},"inherit":true},"ec016caffe0e":{"x":{},"y":{},"text":{},"mode":"markers","marker":{"size":10,"opacity":0.5},"showlegend":false,"alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter"},"ec016caffe0e.1":{"x":[1.5689196324989263,-1.9599639845400538,-0.020890088246888566,0.81221780149991285,2.3939797998185104,-0.59776012604247841,1.7316643961222455,0.40791874094503472,0.10463345561407526,-0.062706777943213832,-0.64849218059285718,-2.3939797998185091,-0.23183436245300987,0.8717709721899588,-0.14674495548654856,0.59776012604247841,-1.1503493803760079,0.54852228269809822,1.0013312975256929,0.062706777943213832,-0.31863936396437514,-1.3305615131788966,-0.81221780149991285,-0.70095141958421181,-0.27497774896900473,0.50058010546673959,-1.072861341650003,1.9599639845400536,-0.50058010546673959,-1.4395314709384557,-0.93458929107348021,0.75541502636046931,-0.548522282698098,0.23183436245300987,0.64849218059285729,0.27497774896900462,-1.2354403415612518,-1.5689196324989263,-1.0013312975256929,1.4395314709384559,1.3305615131788966,1.1503493803760079,-1.7316643961222451,1.235440341561252,0.020890088246888424,-0.36291729513935606,0.14674495548654856,-0.8717709721899588,-0.10463345561407539,-0.18911842627279249,0.45376219016987962,0.36291729513935622,0.70095141958421181,-0.45376219016987929,0.93458929107347977,0.18911842627279238,-0.75541502636046931,0.31863936396437514,-0.40791874094503472,1.072861341650003],"y":[1.5689196324989263,-1.9599639845400538,-0.020890088246888566,0.81221780149991285,2.3939797998185104,-0.59776012604247841,1.7316643961222455,0.40791
874094503472,0.10463345561407526,-0.062706777943213832,-0.64849218059285718,-2.3939797998185091,-0.23183436245300987,0.8717709721899588,-0.14674495548654856,0.59776012604247841,-1.1503493803760079,0.54852228269809822,1.0013312975256929,0.062706777943213832,-0.31863936396437514,-1.3305615131788966,-0.81221780149991285,-0.70095141958421181,-0.27497774896900473,0.50058010546673959,-1.072861341650003,1.9599639845400536,-0.50058010546673959,-1.4395314709384557,-0.93458929107348021,0.75541502636046931,-0.548522282698098,0.23183436245300987,0.64849218059285729,0.27497774896900462,-1.2354403415612518,-1.5689196324989263,-1.0013312975256929,1.4395314709384559,1.3305615131788966,1.1503493803760079,-1.7316643961222451,1.235440341561252,0.020890088246888424,-0.36291729513935606,0.14674495548654856,-0.8717709721899588,-0.10463345561407539,-0.18911842627279249,0.45376219016987962,0.36291729513935622,0.70095141958421181,-0.45376219016987929,0.93458929107347977,0.18911842627279238,-0.75541502636046931,0.31863936396437514,-0.40791874094503472,1.072861341650003],"text":{},"mode":"line","marker":{"size":10,"opacity":0.5},"showlegend":false,"alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter","name":"","line":{"width":2},"inherit":true},"ec01165080e1":{"x":{},"y":{},"text":{},"mode":"markers","marker":{"size":10,"opacity":0.5},"showlegend":false,"alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter"},"ec01165080e1.1":{"x":[15368.560853892317,15562.989035985946,15757.417218079574,15951.845400173203,16146.273582266831,16340.701764360459,16535.12994645409,16729.558128547716,16923.986310641347,17118.414492734973,17312.842674828604,17507.27085692223,17701.699039015861,17896.127221109487,18090.555403203118,18284.983585296744,18479.411767390375,18673.839949484001,18868.268131577632,19062.696313671258,19257.124495764889,19451.552677858519,19645.980859952146,19840.409042045772,20034.837224139403,20229.265406233033,20423.69358832666,20618.12177042029,20812.549952513917,21
006.978134607547,21201.406316701174,21395.834498794804,21590.262680888431,21784.690862982061,21979.119045075688,22173.547227169318,22367.975409262945,22562.403591356575,22756.831773450205,22951.259955543832,23145.688137637459,23340.116319731089,23534.544501824719,23728.972683918346,23923.400866011973,24117.829048105603,24312.257230199233,24506.68541229286,24701.113594386487,24895.541776480117],"y":[1.0388687791478377,1.0278523166907649,1.0168580248230832,1.0058073411886066,0.99462571895643204,0.98373327458311333,0.9732581126178812,0.9629226881863675,0.95244945641420231,0.94156087242701691,0.92997939135044183,0.91783490324339301,0.90548890328621845,0.89290483511610319,0.8800452971373951,0.86687288775444271,0.85335020537159367,0.83955833486106068,0.82670083986227405,0.81498413649309454,0.80426093274419652,0.79438393660625473,0.78520585606994375,0.77657939912593843,0.76834243739674224,0.76033343004538589,0.75264289889280267,0.74539763572237283,0.73872443231747598,0.73275008046149259,0.72760137193780272,0.72333731315201855,0.71978462565967116,0.71680080419855874,0.71424801997815246,0.71198844420792406,0.71003373754366106,0.70852157347646538,0.70733220021973597,0.70634054037338345,0.70542151653731877,0.70445005131145233,0.70330134583933168,0.70215294773804515,0.70119271962909324,0.70036224306488748,0.69960309959784028,0.69885687078036351,0.69806513816486948,0.69716948330377015],"text":"","mode":"line","marker":{"size":10,"opacity":0.5},"showlegend":false,"alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter","name":"Smooth","line":{"width":2},"inherit":true},"ec017f3d3f38":{"x":{},"y":{},"text":{},"mode":"markers","marker":{"size":10,"opacity":0.5},"showlegend":false,"alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter"},"ec017f3d3f38.1":{"x":[0.016666667753011361,0.017704221827653254,0.018741775902295147,0.019779329976937036,0.020816884051578929,0.021854438126220822,0.022891992200862715,0.023929546275504608,0.024967100350146501,0.02600465442478839
4,0.027042208499430283,0.028079762574072176,0.029117316648714069,0.030154870723355959,0.031192424797997852,0.032229978872639745,0.033267532947281638,0.03430508702192353,0.035342641096565423,0.036380195171207316,0.037417749245849202,0.038455303320491102,0.039492857395132988,0.040530411469774888,0.041567965544416774,0.042605519619058667,0.04364307369370056,0.044680627768342453,0.045718181842984346,0.046755735917626239,0.047793289992268131,0.048830844066910017,0.049868398141551917,0.050905952216193803,0.051943506290835703,0.052981060365477589,0.054018614440119489,0.055056168514761375,0.056093722589403261,0.057131276664045161,0.058168830738687047,0.059206384813328947,0.060243938887970833,0.061281492962612732,0.062319047037254618,0.063356601111896518,0.064394155186538404,0.065431709261180304,0.06646926333582219,0.067506817410464076],"y":[-122.22824016412525,-104.48198432637575,-90.649742234246503,-76.880161952441355,-66.577062987176475,-58.457386943892885,-52.197542720797365,-47.823993417702468,-47.549752471401632,-54.368830932317948,-70.736643610560421,-98.273028192746764,-116.14248744058821,-117.04304836614132,-104.52698020405339,-82.14655218897164,-53.454033555543404,-22.001693538415928,8.6581986277634542,34.973373708347516,52.92619849776402,62.70282995518663,67.147742971596784,69.105563619904629,71.420917973020181,76.93843210385343,88.482971988253055,97.558773859111312,96.767675441822263,87.981713201129992,73.072923601778385,53.91334310851164,32.375008186073302,10.329955299207942,-10.349779087341027,-27.792158508829075,-41.329279277397774,-56.309866394573518,-73.388695653373659,-92.275584304341947,-112.68034959802146,-134.31280878495627,-156.88277911568932,-180.1000778407647,-203.67452221072534,-227.31592947611551,-250.73411688747794,-273.63890169535688,-295.74010115029529,-316.74753250283686],"text":"","mode":"line","marker":{"size":10,"opacity":0.5},"showlegend":false,"alpha_stroke":1,"sizes":[10,100],"spans":[1,20],"type":"scatter","name":"Smooth","line":{"width":
2},"inherit":true}},"source":"A","config":{"modeBarButtonsToAdd":["hoverclosest","hovercompare"],"showSendToCloud":false},"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.20000000000000001,"selected":{"opacity":1},"debounce":0},"subplot":true,"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot","plotly_sunburstclick"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script>

---

### Predicting Costs

### Average Costs `interval="confidence"`

``` r
predict(Model.HMB, newdata=data.frame(units=c(200,250,300)),
        interval="confidence")
```

```
       fit      lwr      upr
1 16648.95 16303.25 16994.66
2 17734.03 17448.18 18019.88
3 18819.11 18576.63 19061.58
```

### All Costs `interval="prediction"`

``` r
predict(Model.HMB, newdata=data.frame(units=c(200,250,300)),
        interval="prediction")
```

```
       fit      lwr      upr
1 16648.95 14869.98 18427.92
2 17734.03 15965.71 19502.35
3 18819.11 17057.28 20580.93
```

The prediction interval is wider because it covers a single new observation. It therefore adds the residual variance to the uncertainty in the fitted average.

---

### Diminishing Marginal Cost?
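It helps to pin down what "diminishing marginal cost" means for a quadratic fit like `SClm2` below. If total cost is `\(C = b_0 + b_1 q + b_2 q^2\)`, then marginal cost is `\(dC/dq = b_1 + 2 b_2 q\)`. That quantity falls as output rises only when `\(b_2 < 0\)`. The sketch below illustrates this on simulated data. The `units`/`cost` values, the true coefficients, and the helper `marginal_cost()` are all hypothetical; this is not the `Semiconductors` data.

``` r
# Hypothetical simulated cost data (NOT the Semiconductors data):
# total cost rises with units, but at a decreasing rate.
set.seed(42)
units <- runif(60, 100, 400)
cost  <- 5000 + 60 * units - 0.05 * units^2 + rnorm(60, sd = 500)
sim   <- data.frame(units = units, cost = cost)

# Same specification as SClm2: a raw (untransformed) quadratic in units
quad <- lm(cost ~ poly(units, 2, raw = TRUE), data = sim)
b <- coef(quad)  # b[1] = intercept, b[2] = linear term, b[3] = quadratic term

# Hypothetical helper: marginal cost dC/dq = b1 + 2 * b2 * q
marginal_cost <- function(q) unname(b[2] + 2 * b[3] * q)

marginal_cost(c(100, 250, 400))  # declines in q whenever b[3] < 0
```

This derivative can be read straight off the coefficients only because `raw = TRUE` keeps them on the original `units` scale. With the default orthogonal polynomials (`raw = FALSE`), the coefficients would first need to be transformed back.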
``` r Semiconductors %>% ggplot() + aes(x=units, y=TotCost4) + geom_point() + geom_smooth(method = "lm") + geom_smooth(method = "loess", color="red") + theme_xaringan() + labs(x="Units", y="Total Cost", title="Semiconductors") ``` <img src="index_files/figure-html/unnamed-chunk-35-1.svg" width="468" /> --- ## Residuals ``` r SClm <- lm(TotCost4~units, data=Semiconductors) SClm2 <- lm(TotCost4~poly(units, 2, raw=TRUE), data=Semiconductors) par(mfrow=c(2,2)) plot(SClm) ``` <img src="index_files/figure-html/unnamed-chunk-36-1.svg" width="468" /> --- ## Residuals ``` r resid.plotter(SClm2) ``` <img src="index_files/figure-html/unnamed-chunk-37-1.svg" width="468" /> --- ## Comparing Squares ``` r anova(SClm,SClm2) ``` ``` Analysis of Variance Table Model 1: TotCost4 ~ units Model 2: TotCost4 ~ poly(units, 2, raw = TRUE) Res.Df RSS Df Sum of Sq F Pr(>F) 1 58 304149603 2 57 203027172 1 101122431 28.39 0.000001757 *** --- Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- ## Line*s*? ``` r PPlm <- lm(TotCost3 ~ units:PlantFac, data=PowerPlant) PowerPlant %>% ggplot() + aes(x=units, y=TotCost3, color=PlantFac) + geom_point() + geom_smooth(method = "lm") + theme_xaringan() + labs(x="Units", y="Total Cost", title="Power Plant") ``` <img src="index_files/figure-html/unnamed-chunk-39-1.svg" width="468" /> --- ## Comparison `test` or `anova` ``` r PPlm1 <- lm(TotCost3~units, data=PowerPlant) PPlm2 <- lm(TotCost3~PlantFac*units, data=PowerPlant) anova(PPlm1,PPlm) ``` ``` Analysis of Variance Table Model 1: TotCost3 ~ units Model 2: TotCost3 ~ units:PlantFac Res.Df RSS Df Sum of Sq F Pr(>F) 1 58 585943761 2 56 348638405 2 237305356 19.059 0.0000004859 *** --- Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 
0.1 ' ' 1 ``` --- ``` r anova(PPlm,PPlm2) ``` ``` Analysis of Variance Table Model 1: TotCost3 ~ units:PlantFac Model 2: TotCost3 ~ PlantFac * units Res.Df RSS Df Sum of Sq F Pr(>F) 1 56 348638405 2 54 345221354 2 3417052 0.2672 0.7665 ``` --- ## The Premier League ``` r Model.EPL <- lm(Points ~ Wage.Bill.milGBP, data=EPL) summary(Model.EPL) ``` ``` Call: lm(formula = Points ~ Wage.Bill.milGBP, data = EPL) Residuals: Min 1Q Median 3Q Max -12.569 -3.201 0.353 3.712 11.129 Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) 32.11589 2.74905 11.683 0.000000000776 *** Wage.Bill.milGBP 0.24023 0.02987 8.042 0.000000227415 *** --- Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 Residual standard error: 6.438 on 18 degrees of freedom Multiple R-squared: 0.7823, Adjusted R-squared: 0.7702 F-statistic: 64.67 on 1 and 18 DF, p-value: 0.0000002274 ``` `$$Points = 32.12 + 0.24*Wage.Bill + \epsilon$$` --- ``` r EPL$FV <- fitted.values(Model.EPL) PP <- ggplot(EPL) + aes(x=Wage.Bill.milGBP, y=Points, text=Team) + geom_point() + geom_smooth(method="lm", color="black") PP ``` <img src="index_files/figure-html/unnamed-chunk-43-1.svg" width="468" /> --- ``` r plotly::ggplotly(PP) ``` <div class="plotly html-widget html-fill-item" id="htmlwidget-0c6217bcb9e75787b912" style="width:468px;height:432px;"></div> <script type="application/json" data-for="htmlwidget-0c6217bcb9e75787b912">{"x":{"data":[{"x":[124,83,38,50,25,56,190,58,58,135,174,153,54,47,61,91,37,56,40,38],"y":[68,48,39,43,39,46,71,54,49,58,71,80,46,46,47,62,47,33,42,30],"text":["Wage.Bill.milGBP: 124<br />Points: 68<br />Arsenal","Wage.Bill.milGBP: 83<br />Points: 48<br />Aston Villa","Wage.Bill.milGBP: 38<br />Points: 39<br />Birmingham City","Wage.Bill.milGBP: 50<br />Points: 43<br />Blackburn Rovers","Wage.Bill.milGBP: 25<br />Points: 39<br />Blackpool","Wage.Bill.milGBP: 56<br />Points: 46<br />Bolton Wanderers","Wage.Bill.milGBP: 190<br />Points: 71<br />Chelsea","Wage.Bill.milGBP: 58<br 
</script>

*[Interactive plot: Points vs. Wage.Bill.milGBP (wage bill, millions of GBP) for English Premier League clubs]*

---

# Not Unreasonable

``` r
# Normal Q-Q plot of the EPL model's residuals
qqnorm(residuals(Model.EPL))
```

<img src="index_files/figure-html/unnamed-chunk-45-1.svg" width="468" />

---

## Residuals

``` r
resid.plotter(Model.EPL)
```

<img src="index_files/figure-html/unnamed-chunk-46-1.svg" width="468" />

---

# Predicting Points

``` r
# 95% confidence intervals for mean points; head() keeps wage bills 0 to 90
head(predict(Model.EPL,
             newdata = data.frame(Wage.Bill.milGBP = seq(0, 200, 10)),
             interval = "confidence"), 10)
```

```
        fit      lwr      upr
1  32.11589 26.34036 37.89143
2  34.51820 29.26702 39.76939
3  36.92051 32.16859 41.67244
4  39.32282 35.03629 43.60936
5  41.72513 37.85788 45.59239
6  44.12744 40.61679 47.63809
7  46.52975 43.29225 49.76725
8  48.93206 45.86190 52.00222
9  51.33437 48.30814 54.36060
10 53.73668 50.62574 56.84762
```

---

## Some Concluding Remarks

1. It's all about squares. But really, it's all about *normals*.
2. Assessing normality is crucial to inference in linear models, but we cannot really **know** whether it holds. If it does:
   a. Slopes and intercepts, standardized by their standard errors, follow `t` distributions, so their confidence intervals use `t` quantiles.
   b. Ratios of sums of squares, each divided by its degrees of freedom, follow `F` distributions.
   c. Predictions of the average have `t`-based confidence intervals; predictions for new observations widen those intervals by adding the `normal` error variance.
   d. **All of this is founded upon the sum of squared errors, scaled by `\(\sigma^2\)`, being `\(\chi^2\)`.**
3. This idea of **explaining variance**, or unpacking a black box, is the core question of virtually all *machine learning* and modelling.
4. [The capital asset pricing model.](https://shinytick-geiwez4tia-uc.a.run.app)
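---

## Checking the intervals by hand

A minimal sketch of where the `t` in the prediction intervals comes from, using simulated data rather than `Model.EPL`. The variables `wage` and `points`, the seed, and the true coefficients are assumptions made purely for illustration. The confidence interval is the fitted mean plus or minus a `t` quantile times `se.fit`; the prediction interval adds the residual variance to `se.fit^2` before taking the square root.

``` r
# Simulated stand-in for the EPL data (hypothetical values, not the real data)
set.seed(42)
n <- 20
wage <- runif(n, 30, 180)
points <- 30 + 0.25 * wage + rnorm(n, sd = 8)
fit <- lm(points ~ wage)

new <- data.frame(wage = c(50, 100, 150))
p <- predict(fit, newdata = new, se.fit = TRUE)  # fitted means and their SEs
t.crit <- qt(0.975, df = fit$df.residual)        # 95% two-sided t quantile

# Confidence interval for the mean: fit +/- t * se.fit
ci.manual <- cbind(fit = p$fit,
                   lwr = p$fit - t.crit * p$se.fit,
                   upr = p$fit + t.crit * p$se.fit)

# Prediction interval for a new observation: add the residual variance s^2
s <- summary(fit)$sigma
pi.se <- sqrt(p$se.fit^2 + s^2)
pi.manual <- cbind(fit = p$fit,
                   lwr = p$fit - t.crit * pi.se,
                   upr = p$fit + t.crit * pi.se)

ci.R <- predict(fit, newdata = new, interval = "confidence")
pi.R <- predict(fit, newdata = new, interval = "prediction")

all.equal(unname(ci.manual), unname(ci.R))  # TRUE
all.equal(unname(pi.manual), unname(pi.R))  # TRUE
```

Because `s^2` is positive, each prediction interval is wider than the matching confidence interval.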
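---

## `F` as a ratio of sums of squares

A short sketch, again on simulated data (`x`, `y`, the seed, and the coefficients are assumptions), that rebuilds the regression `F` statistic from sums of squares and checks it against `summary()`. With a single regressor, `F` is the square of the slope's `t` statistic, and the two tests give the same p-value.

``` r
set.seed(1)
n <- 30
x <- rnorm(n)
y <- 2 + 0.5 * x + rnorm(n)   # hypothetical data-generating process
fit <- lm(y ~ x)

SST <- sum((y - mean(y))^2)   # total sum of squares
SSE <- sum(residuals(fit)^2)  # residual (error) sum of squares
SSR <- SST - SSE              # regression (explained) sum of squares
df.model <- 1
df.resid <- fit$df.residual   # n - 2

# F is the ratio of mean squares
F.manual <- (SSR / df.model) / (SSE / df.resid)
F.R <- unname(summary(fit)$fstatistic["value"])
t.slope <- coef(summary(fit))["x", "t value"]

all.equal(F.manual, F.R)        # TRUE
all.equal(F.manual, t.slope^2)  # TRUE: one regressor, so F = t^2

# The F-test p-value matches the slope's two-sided t-test p-value
pf(F.manual, df.model, df.resid, lower.tail = FALSE)
coef(summary(fit))["x", "Pr(>|t|)"]
```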
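---

## Is the scaled SSE really `\(\chi^2\)`?

A simulation sketch, assuming normal errors with a known `sigma`: draw many samples from one fixed design, fit `lm()` to each, and compare the distribution of SSE/`\(\sigma^2\)` with a `\(\chi^2\)` distribution on `\(n-2\)` degrees of freedom. The design, `sigma`, sample size, and number of replications are all assumptions chosen for illustration.

``` r
set.seed(2024)
n <- 25
sigma <- 3                    # assumed known error SD
x <- runif(n, 0, 10)          # design held fixed across replications
reps <- 2000
dof <- n - 2                  # residual degrees of freedom

# Each replication: new normal errors, refit, and scale the SSE by sigma^2
scaled.sse <- replicate(reps, {
  y <- 1 + 2 * x + rnorm(n, sd = sigma)
  sum(residuals(lm(y ~ x))^2) / sigma^2
})

# A chi-square on dof degrees of freedom has mean dof and variance 2 * dof
c(simulated.mean = mean(scaled.sse), chisq.mean = dof)
c(simulated.var = var(scaled.sse), chisq.var = 2 * dof)

# Simulated quantiles against qchisq()
probs <- c(0.05, 0.5, 0.95)
rbind(simulated = quantile(scaled.sse, probs),
      chisq = qchisq(probs, dof))
```

The simulated mean should sit near `n - 2 = 23` rather than `n = 25`: two degrees of freedom are spent estimating the intercept and slope.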